High-performance computing (HPC) refers to computing systems and environments that typically use many processors (as part of a single machine) or several computers organized in a cluster (operating as a single computing resource). There are many types of HPC systems, ranging from large clusters of standard computers to highly specialized hardware.

This article is based on "Summary of High-Performance Computing Knowledge" [Website: https://mp.weixin.qq.com/s/P0y-e0Cb8eSzJIlFbUFyEg] and "OpenMP Compilation Principles and Implementation" [Website: https://mp.weixin.qq.com/s/P0y-e0Cb8eSzJIlFbUFyEg], together with "Analysis of High-Performance Computing Application Features" [Website: https://mp.weixin.qq.com/s/P0y-e0Cb8eSzJIlFbUFyEg], "Supercomputer Research Report" [Website: https://mp.weixin.qq.com/s/P0y-e0Cb8eSzJIlFbUFyEg], "In-Depth Report: GPU Research Framework" [Website: https://mp.weixin.qq.com/s/P0y-e0Cb8eSzJIlFbUFyEg], and "High-Performance Computing and Supercomputing Topics" [Website: https://mp.weixin.qq.com/s/P0y-e0Cb8eSzJIlFbUFyEg].

Most cluster-based HPC systems use high-performance network interconnects such as InfiniBand or Myrinet. The basic network topology and organization can be a simple bus topology. In high-performance environments, a mesh network provides lower latency between hosts, which improves overall network performance and transfer rates.

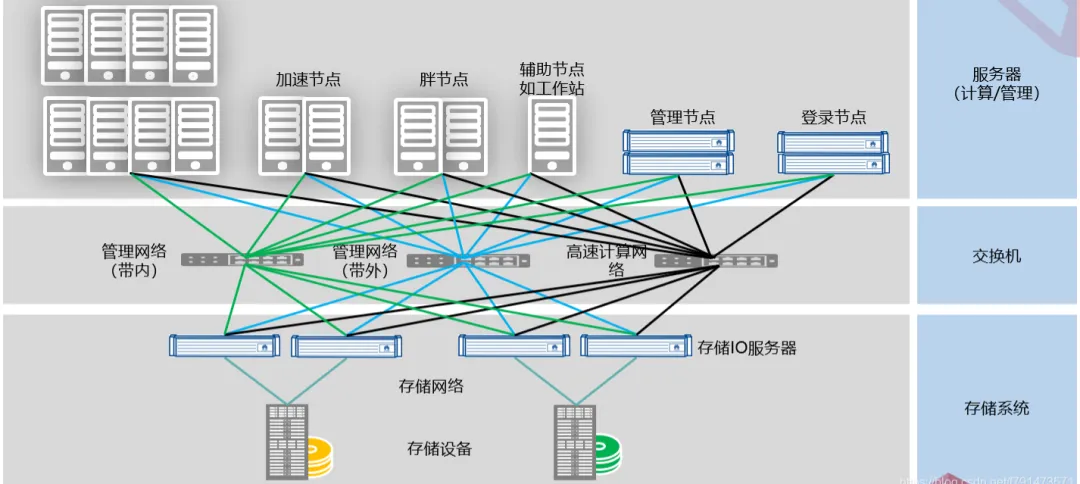

High-performance computing hardware architecture and overall architecture

High-performance computing topology: in terms of hardware structure, a high-performance computing system consists of compute nodes, I/O nodes, login nodes, management nodes, high-speed networks, and storage systems.

Performance metrics for high-performance computing clusters

FLOPS is the number of floating-point operations per second and is used to evaluate the computing capability of a computer. The theoretical performance of a high-performance computing cluster can be calculated from its hardware configuration and parameters.

1) CPU theoretical performance calculation method (taking Intel CPU as an example)

Single precision: clock frequency * (vector bit width / 32) * 2

Double precision: clock frequency * (vector bit width / 64) * 2

The factor 2 represents the multiply-add instruction.

2) GPU theoretical performance calculation method (taking NVIDIA GPU as an example)

Single precision: instruction throughput * number of compute units * frequency

3) MIC theoretical performance calculation method (taking Intel MIC as an example)

Single precision: clock frequency * (vector bit width / 32) * 2

Double precision: clock frequency * (vector bit width / 64) * 2

The factor 2 represents the multiply-add instruction.

The overall computing capability of a system is evaluated by running test programs.

Linpack test: Gaussian elimination with pivoting is used to solve a dense system of linear equations in double precision. The result is expressed as the number of floating-point operations per second (FLOPS).
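The kind of computation the Linpack test times can be sketched in a few lines. This is a minimal, unoptimized illustration of Gaussian elimination with partial pivoting, not the benchmark code itself; the function names are hypothetical, and the operation count `2/3 n^3 + 2 n^2` is the conventional nominal count assumed here for converting a solve time into FLOPS.

```python
def solve_with_partial_pivoting(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.

    `a` is a list of n rows of n numbers and `b` a list of n numbers.
    Both are copied, so the inputs are left unchanged.
    """
    n = len(a)
    # Work on an augmented copy [a | b] in double precision.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]

    for col in range(n):
        # Partial pivoting: choose the row with the largest |entry| in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]

        # Eliminate the entries below the pivot.
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]

    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        s = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - s) / m[row][row]
    return x


def nominal_flop_count(n):
    """Nominal operation count for an n x n solve (assumed convention)."""
    return (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2
```

Dividing `nominal_flop_count(n)` by the measured solve time in seconds gives a FLOPS figure for that run.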

HPL: High Performance Linpack (HPL) is the test for large-scale parallel computing systems and the first standard public version of the parallel Linpack test package. It is used for the TOP500 ranking and for China's TOP100 ranking.
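The theoretical peak formulas given earlier in this section can be turned into a small calculator. This is a sketch under stated assumptions: the function names are hypothetical, the `cores` parameter is an addition not present in the formulas (it defaults to 1, which reproduces them exactly), and the clock speeds and vector widths in the usage note are illustrative values rather than figures for any specific product.

```python
def cpu_peak_gflops(clock_ghz, vector_bits, precision_bits, cores=1):
    """Theoretical peak in GFLOPS: clock * (vector width / precision) * 2.

    The factor 2 accounts for the multiply-add instruction.
    `cores` is an assumed extension for whole-chip estimates.
    """
    if precision_bits not in (32, 64):
        raise ValueError("precision_bits must be 32 (single) or 64 (double)")
    lanes = vector_bits // precision_bits  # elements processed per vector instruction
    return clock_ghz * lanes * 2 * cores


def gpu_peak_gflops(ops_per_cycle_per_unit, units, clock_ghz):
    """Theoretical peak in GFLOPS: instruction throughput * units * frequency."""
    return ops_per_cycle_per_unit * units * clock_ghz
```

For example, with an assumed 2.5 GHz clock and 512-bit vectors, `cpu_peak_gflops(2.5, 512, 32)` evaluates the single-precision formula and `cpu_peak_gflops(2.5, 512, 64)` the double-precision one; the double-precision result is half the single-precision result because half as many elements fit in each vector.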

Homogeneous computing node

A compute node in a homogeneous cluster consists of CPU computing resources. Compute nodes are currently available in single-socket, dual-socket, four-socket, and eight-socket CPU configurations.

Heterogeneous computing nodes

Heterogeneous computing technology emerged in the mid-1980s. Because of its high computing capability, good scalability, high utilization of computing resources, and large development potential, it has become one of the research hotspots in parallel and distributed computing. Heterogeneous computing is generally used to accelerate workloads and save energy. Mainstream heterogeneous combinations currently include CPU+GPU, CPU+MIC, and CPU+FPGA.

Common parallel file systems

PVFS: The Parallel Virtual File System (PVFS) project at Clemson University aims to create an open-source parallel file system for PC clusters running Linux. PVFS has been widely used as a high-performance, large-scale file system for temporary storage and as infrastructure for parallel I/O research. As a parallel file system, PVFS stores data in the existing file systems of multiple cluster nodes, and multiple clients can access the data at the same time.

Lustre: Lustre is a parallel distributed file system that is typically used in large computer clusters and supercomputers. The name is a portmanteau of Linux and cluster. Lustre 1.0 was released in 2003, and the source code is licensed under the GNU GPLv2.

Main functions of cluster management system

Several mainstream server manufacturers now provide their own cluster management systems, such as Inspur's Cluster Engine, Sugon's Gridview, HP's Serviceguard, and IBM Platform Cluster Manager. A cluster management system provides the following functions:

1) Monitoring module: monitors the running status of nodes, networks, files, power supplies, and other resources in the cluster, including dynamic information, real-time information, historical information, and per-node monitoring. You can monitor the running status and parameters of the entire cluster.

2) User management module: manages the user groups and users of the system. You can view, add, delete, and edit user groups and users.

3) Network management module: manages the networks in the system.

4) File management module: manages files on the nodes. You can upload, create, open, copy, paste, rename, package, delete, and download files.

5) Power management module: handles automatic power-on and power-off of the system, and so on.

6) Job submission and management module: submits new jobs, shows the status of jobs in the system, and performs operations such as executing and deleting jobs. You can also view job execution logs.

7) Friendly graphical interface: current cluster management systems provide graphical interfaces that make clusters easier to use and manage.

Cluster job scheduling system

The current mainstream HPC job scheduling systems are LSF, Slurm, PBS, and SGE. Each has spawned derivative versions, so they are sometimes called the four major families.

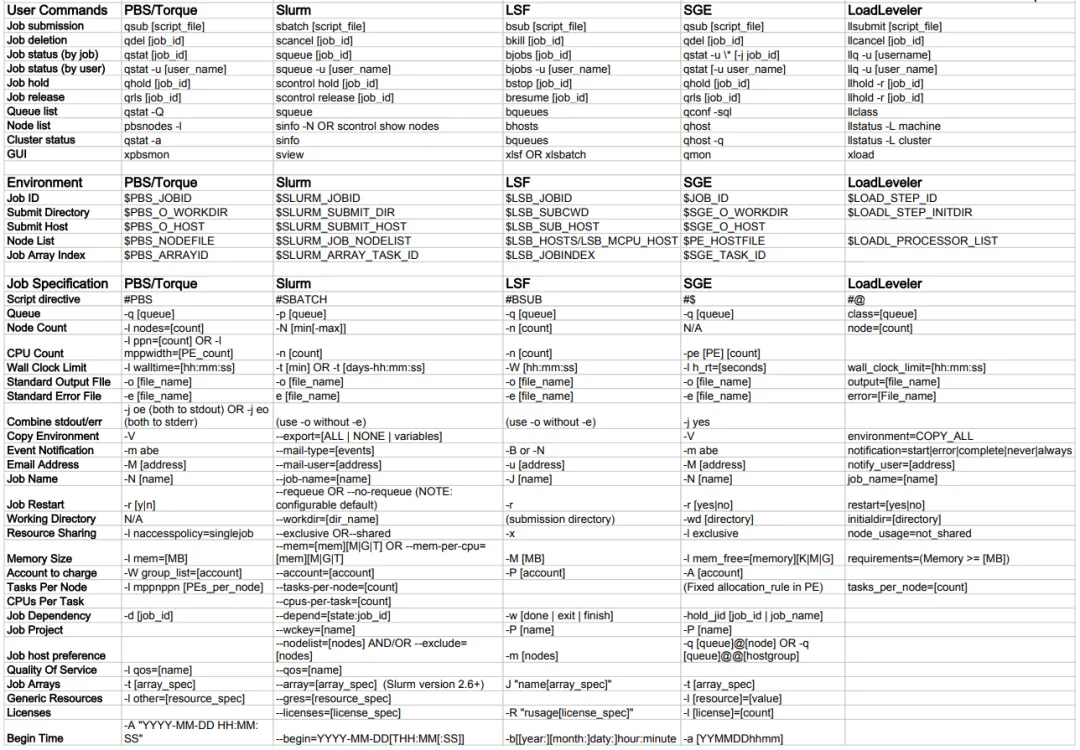

The following table lists the commands, environment variables, and job specification options of the commonly used HPC job scheduling systems: PBS/Torque, Slurm, LSF, SGE, and LoadLeveler. Each of these schedulers has unique features, but the most commonly used functionality is available in all of the environments listed in the table.

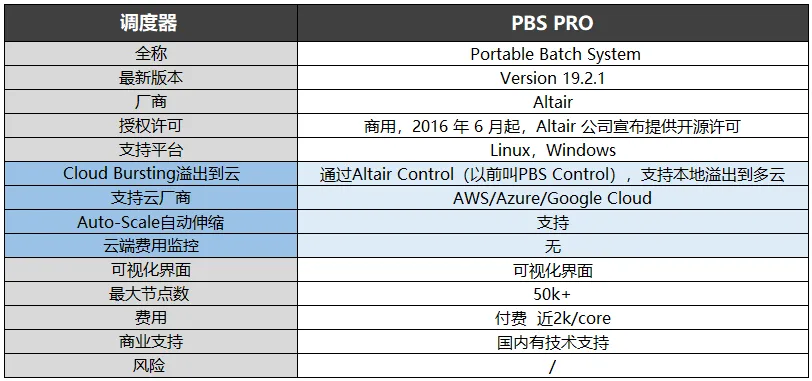
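The core of such a comparison can also be captured as a small lookup structure. The sketch below covers only the basic submit, status, and cancel commands and the script directive prefix for each scheduler; the dictionary layout and the `lookup` helper are hypothetical names chosen for illustration, and site installations may wrap or rename these commands.

```python
# Basic user commands and in-script directive prefixes per scheduler.
SCHEDULERS = {
    "PBS/Torque":  {"submit": "qsub",     "status": "qstat",  "cancel": "qdel",     "directive": "#PBS"},
    "Slurm":       {"submit": "sbatch",   "status": "squeue", "cancel": "scancel",  "directive": "#SBATCH"},
    "LSF":         {"submit": "bsub",     "status": "bjobs",  "cancel": "bkill",    "directive": "#BSUB"},
    "SGE":         {"submit": "qsub",     "status": "qstat",  "cancel": "qdel",     "directive": "#$"},
    "LoadLeveler": {"submit": "llsubmit", "status": "llq",    "cancel": "llcancel", "directive": "# @"},
}


def lookup(scheduler, item):
    """Return the command or directive prefix for a scheduler.

    `item` is one of "submit", "status", "cancel", or "directive".
    """
    try:
        return SCHEDULERS[scheduler][item]
    except KeyError:
        raise ValueError(f"unknown scheduler or item: {scheduler!r}, {item!r}") from None
```

A portable wrapper script could call `lookup("Slurm", "submit")` or `lookup("LSF", "status")` to pick the right executable for the cluster it runs on.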

The most important module in a cluster management system is the job scheduling system. Many current job scheduling systems are based on PBS. PBS (Portable Batch System) was originally developed by NASA's Ames Research Center to provide a software package for flexible batch processing on heterogeneous computing networks, in particular for high-performance computing environments such as cluster systems, supercomputers, and massively parallel systems.

The main features of PBS are open source code that is freely available, and support for batch jobs, interactive jobs, serial jobs, and a variety of parallel jobs such as MPI, PVM, HPF, and MPL. PBS is one of the most full-featured, longest-established, and most widely supported local cluster schedulers.

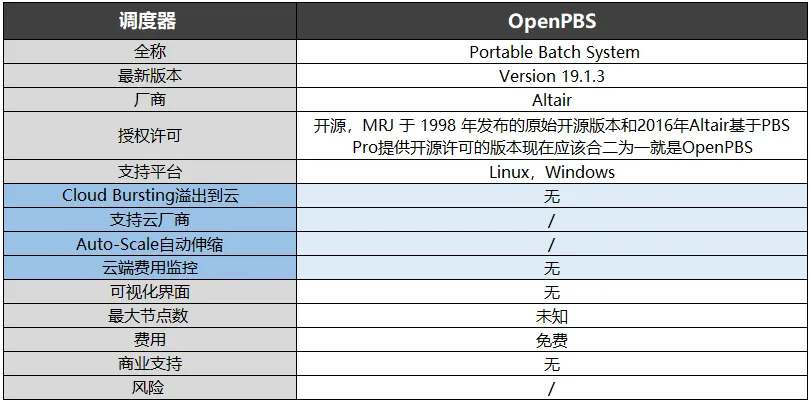

PBS currently includes OpenPBS, PBS Pro, and Torque. OpenPBS is the earliest PBS system and sees little ongoing development. PBS Pro is the commercial version of PBS and has the richest feature set. Torque is an open-source version for which the company Clustering took over OpenPBS and provides ongoing support. PBS has the following features:

1) Ease of use: provides a unified interface for all resources and is easy to configure to meet the needs of different systems. The flexible job scheduler allows different systems to adopt their own scheduling policies.

2) Portability: conforms to the POSIX 1003.2 standard and can be used in various environments such as shell and batch processing.

3) Adaptability: adapts to various management policies and provides extensible authentication and security models. It supports dynamic load distribution over wide area networks and virtual organizations built on multiple physical entities in different locations.

4) Flexibility: supports both interactive and batch jobs. Torque PBS provides control over batch jobs and distributed compute nodes.

In 2016, Altair released an open-source licensed version based on PBS Pro. Together with the original open-source version released by MRJ in 1998, this is roughly what is now known as OpenPBS. Compared with PBS Pro, it has many more restrictions, but both support Linux and Windows.

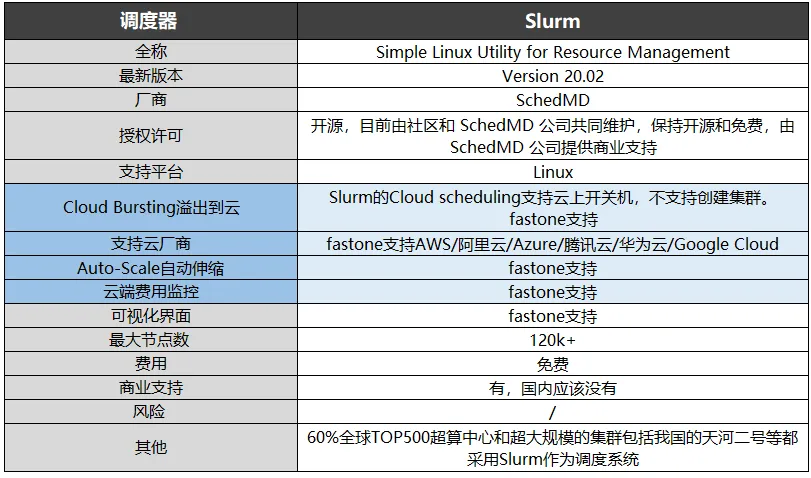

Slurm stands for Simple Linux Utility for Resource Management. Its early development was carried out mainly by Lawrence Livermore National Laboratory, SchedMD, Linux NetworX, Hewlett-Packard, and Groupe Bull, and it was inspired by the closed-source software Quadrics RMS. At the time of writing, the latest version of Slurm was 22.05. It is jointly maintained by the community and SchedMD, and it remains open source and free of charge, with commercial support provided by SchedMD. It supports only Linux systems, and the maximum number of nodes exceeds 120,000. Slurm offers high fault tolerance, support for heterogeneous resources, and high scalability, and it can accept more than 1,000 job submissions per second. Because it is an open and highly configurable framework with more than 100 plug-ins, it applies to a wide range of environments.

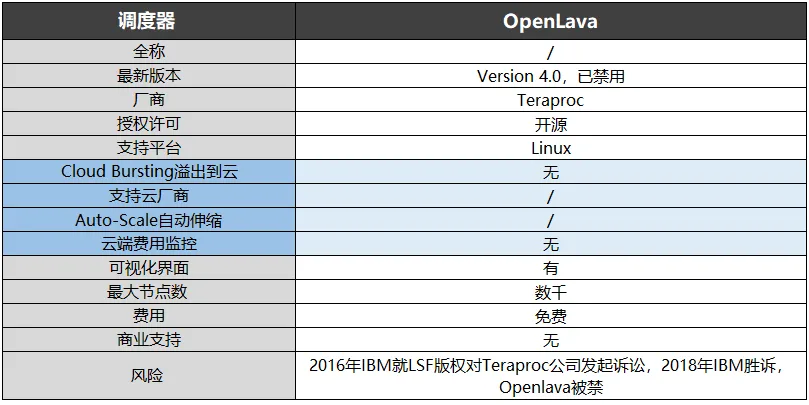
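To make the Slurm workflow concrete, the sketch below renders a minimal batch script of the kind submitted with `sbatch`. It is an illustration under assumptions: the helper name `render_slurm_script` and the job parameters are hypothetical, and only a few common `#SBATCH` options are shown; real sites usually require additional options such as a partition or account.

```python
def render_slurm_script(job_name, nodes, tasks_per_node, walltime, command):
    """Return the text of a minimal Slurm batch script.

    `walltime` uses Slurm's HH:MM:SS format; `command` is launched with srun.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        f"#SBATCH --time={walltime}",
        "",
        # srun starts the command on the resources allocated to the job.
        f"srun {command}",
    ]
    return "\n".join(lines) + "\n"
```

Writing the returned text to a file such as `job.sh` (a hypothetical name) and running `sbatch job.sh` would queue the job; `squeue` then shows its status.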

There are three main schedulers based on LSF (Load Sharing Facility): Spectrum LSF, Platform LSF, and OpenLava. The early LSF grew out of the Utopia system developed at the University of Toronto. In 2007, Platform Computing open-sourced Platform Lava, a simplified version based on an earlier LSF release. That open-source project was suspended in 2011 and was succeeded by OpenLava: in 2011, Platform employee David Bigagli created OpenLava 1.0 from code derived from Platform Lava. In 2014, some Platform employees founded Teraproc to provide development and commercial support for OpenLava. In 2016, IBM sued Teraproc over the LSF copyright. In 2018, IBM won the lawsuit and OpenLava was banned.

IBM acquired Platform Computing in January 2012, after the Platform Lava open-source project had been suspended in 2011. Spectrum LSF is the commercial version released after the acquisition. It is currently at version 10.1.0, supports Linux and Windows, and scales to more than 6,000 nodes. Commercial support is available in China.

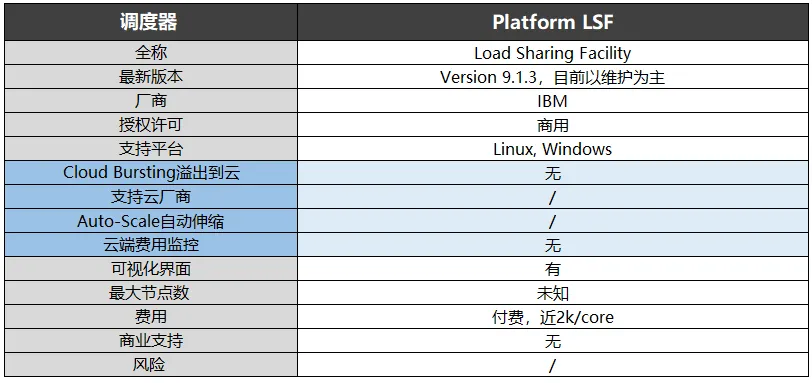

Platform LSF is an earlier version of LSF and, like Spectrum LSF, belongs to IBM. The current version is 9.1.3. Feature updates appear to have stopped, and the product is mainly in maintenance.

Among the three schedulers, only Spectrum LSF supports cluster auto-scaling. It can also burst to the cloud through the LSF resource connector. Supported cloud vendors include AWS, Azure, and Google Cloud.

Parallel programming model

Message Passing Interface (MPI): MPI is a parallel programming technology based on message passing, and MPI programs are parallel programs built on it. Message passing means that each parallel process has its own independent stack and code segment and executes independently, as if the processes were unrelated programs. Information is exchanged between processes only by explicitly calling communication functions.
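The message-passing idea can be illustrated without an MPI installation. In the sketch below, threads and queues are a stand-in for MPI processes and communication calls, which is an assumption made purely so the example runs with the Python standard library; a real MPI program would run separate processes and use calls such as `MPI_Send` and `MPI_Recv`. The function `run_ring` and its helpers are hypothetical names.

```python
import queue
import threading


def run_ring(num_ranks):
    """Pass a token around a ring of workers using explicit send/receive calls.

    Each worker plays the role of one rank. Workers exchange data only
    through their mailboxes, never by reading each other's variables.
    """
    mailboxes = [queue.Queue() for _ in range(num_ranks)]
    # Each worker writes only its own slot; used to report back to the caller.
    results = [None] * num_ranks

    def send(dest, message):
        mailboxes[dest].put(message)

    def recv(rank):
        return mailboxes[rank].get(timeout=5)

    def worker(rank):
        right = (rank + 1) % num_ranks
        if rank == 0:
            send(right, 0)              # rank 0 starts the token
            results[rank] = recv(rank)  # and receives it after a full lap
        else:
            token = recv(rank)          # wait for the left neighbour
            results[rank] = token + rank
            send(right, results[rank])  # add own rank and pass it on

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(num_ranks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With four ranks, the token accumulates 1, 2, and 3 on its way around the ring, so rank 0 ends up holding their sum. The point of the sketch is that all coordination happens through explicit `send` and `recv` calls, which is the defining property of the programming model.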

1) MPI is a library, not a language.

2) MPI is a standard or specification rather than one specific implementation of it. So far, all parallel computer manufacturers provide support for MPI, implementations of MPI for different parallel computers can be obtained free of charge on the Internet, and a correct MPI program can run on all parallel computers without modification.

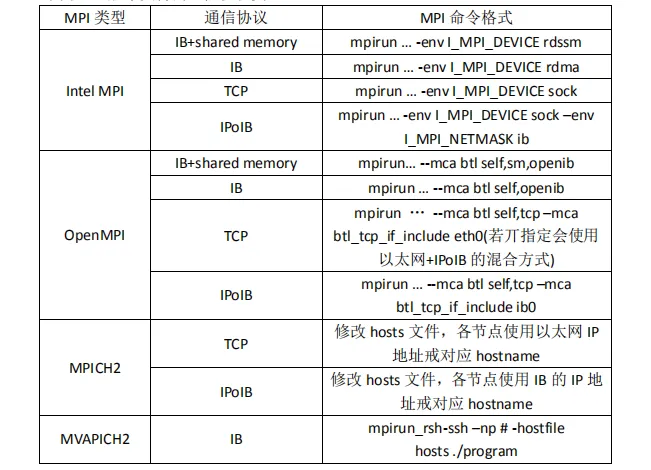

3) MPI is a message-passing programming model and has become the representative of that model. Although the MPI standard is large, its ultimate purpose is to serve inter-process communication.

Common MPI implementations are as follows:

1) OpenMPI

OpenMPI is a high-performance message-passing library. It is an open-source implementation of the MPI-2 standard, developed and maintained by a group of research institutions and companies. OpenMPI can therefore draw on expertise, technology, and resources from across the high-performance computing community to build the best possible MPI library. It offers many conveniences to system and software vendors, application developers, and researchers. It is easy to use and runs natively on a wide variety of operating systems, network interconnects, and batch/scheduling systems.

Download Address: http://www.open-mpi.org/

2) Intel MPI

Intel MPI is the MPI implementation supported by the Intel compiler.

3) MPICH

MPICH is one of the most important implementations of the MPI standard and can be downloaded from the Internet for free. MPICH was developed in parallel with the MPI specification itself, so it most closely reflects the changes and development of MPI. It was developed mainly by Argonne National Laboratory and Mississippi State University, with contributions from IBM, while the standardization of the MPI specification is carried out by the MPI Forum. MPICH is the most popular non-proprietary implementation of MPI and is highly portable. Download address: http://www.mpich.org/

4) MVAPICH

MVAPICH is short for MPI over InfiniBand on the VAPI layer. It is an implementation of the MPI standard that acts as a bridge between MPI and VAPI, the InfiniBand software interface. InfiniBand, short for "infinite bandwidth", is a network architecture that supports high-performance computing systems. InfiniBand and MPI were incompatible until Peter, a scientist at the OSU (Ohio State University) Supercomputer Center, developed MVAPICH in 2002.

MVAPICH and MVAPICH2 can also be obtained from the Open Fabrics Enterprise Distribution (OFED) suite. At the time of writing, the latest version was MVAPICH2 2.2B, and the download address is http://mvapich.cse.ohio-state.edu/

This article is reprinted from: Architect Technology Alliance
