The recent NVIDIA GTC 2024 conference has emerged as the most influential event in the IT industry. With over 17,000 attendees, its scale even surpassed that of Steve Jobs' legendary Apple keynotes. At the conference, NVIDIA unveiled new chips, software, and solutions, showcasing its absolute dominance and immense ambitions in the AI era.

In fact, Jensen Huang, the CEO of NVIDIA, has made it clear that the company's ambitions extend far beyond GPUs. Holding more than 80% of the GPU market, NVIDIA now aims to dominate the data center market, worth over US$1 trillion, by offering comprehensive data center solutions.

However, despite NVIDIA's unrivaled strength, the company still chooses external controller-based (ECB) storage when building AI systems. Even as high bandwidth memory (HBM) replaces DDR memory, ECB storage remains a critical component, much like how ECB storage (from vendors like EMC and Huawei) was essential for Oracle databases during their heyday.

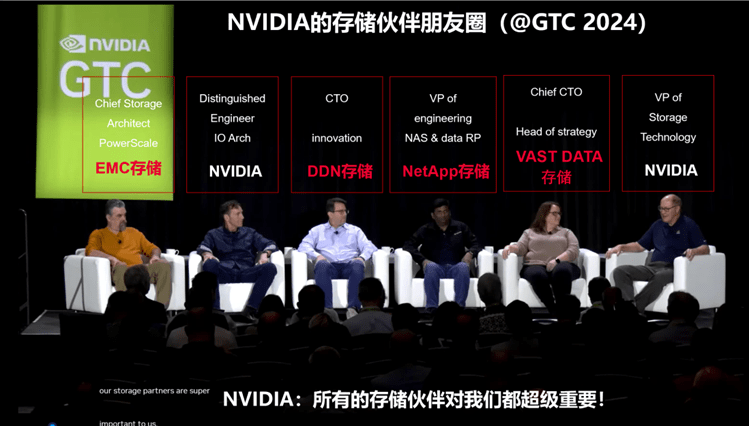

Note: During the NVIDIA GTC 2024 conference, a panel discussion on storage featured top industry vendors and major NVIDIA customers. The discussion focused on how storage solutions can address the challenges posed by the AI era.

Why is storage so important to AI, and why does NVIDIA rely on external storage vendors? To answer this, we must peel back the layers and reveal the underlying truth.

1. NVIDIA's ambitions extend beyond merely selling chips; it aims to sell data center solutions. GPU+CUDA is the core of NVIDIA's full-stack AI data center architecture, which always includes ECB storage as a standard component.

Compute, storage, and network are the three core components of the data center IT stack. On the compute side, NVIDIA is accelerating the replacement of CPUs with its GPUs and DPUs. On the network side, it is advancing the adoption of InfiniBand through its acquisition of Mellanox. When it comes to storage, however, NVIDIA has chosen to collaborate extensively with leading storage vendors such as Dell EMC, NetApp, Pure Storage, DDN, IBM, Vast Data, and WEKA. Across NVIDIA's solutions, from OVX (data center inference) to DGX BasePOD (enterprise AI training clusters), DGX SuperPOD (large-scale training clusters), and DGX supercomputers (ultra-large-scale training clusters), one constant is the integration of ECB storage as a standard configuration.

2. Why is ECB storage a must-have in NVIDIA's architecture?

1) Traditional applications generate and save vast amounts of new data. AI applications differ from traditional ones as they generate minimal new data. Instead, they consume existing data to produce well-trained models and tokens.

2) There is no AI without sufficient data, and the quality of that data determines how far AI can evolve. But how is a massive amount of high-quality data obtained? The most common method is to gather a large amount of public Internet data as the foundational input. The key step is then to combine it with an enterprise's internal data, including core production data and historical archives.

3) Currently, over 80% of enterprise data still resides in data centers, with only a small fraction at the edge or in the cloud. In these data centers, ECB storage is the backbone of enterprise data. Connecting NVIDIA's AI systems to this type of storage ensures a quick and efficient data loop, which is essential for training large AI models. Furthermore, when these models are deployed in real-world applications (such as RAG inference), they must connect directly to production storage to access live production data. Thus, whether in the training phase of large AI models or the inference phase of industrial applications, AI systems must be equipped with ECB storage to fuel AI models with enterprise data, especially production data.

4) The rapid advancement of large AI models has led to a 10,000-fold increase in parameters and data volume. Quickly loading and preparing exabyte-level data for training has become a key determinant of efficiency. The speed of saving and loading checkpoints also significantly affects training efficiency: checkpoints are saved repeatedly during training so that a run can be resumed from a known state after a failure or rolled back after unsatisfactory results. Because ECB storage is purpose-built for data storage and access, it is essential for AI training. Even minor storage performance optimizations can significantly enhance the training efficiency of AI systems, which is why NVIDIA opts for ECB storage.

5) In the context of industrial application inference, data pipelines become essential for handling numerous scenarios and emerging AI applications. Mainstream storage vendors provide data flow, unified management, and data acceleration across edges, data centers, and clouds. This simplifies data access and processing for AI applications while also making the deployment and operation of large AI model applications simpler and more efficient.
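The checkpoint argument in point 4) can be illustrated with a rough back-of-the-envelope model. The sketch below is not taken from NVIDIA or any storage vendor; it assumes, for simplicity, that training stalls completely while each checkpoint is written (synchronous checkpointing). The function name `checkpoint_overhead` and all figures (checkpoint size, bandwidths, interval) are hypothetical values chosen only for illustration.

```python
def checkpoint_overhead(checkpoint_gb: float,
                        write_gb_per_s: float,
                        compute_interval_s: float) -> float:
    """Return the fraction of wall-clock time spent writing checkpoints.

    Assumption: training is fully paused during each checkpoint write,
    so one cycle = compute_interval_s of training + write time.
    """
    write_s = checkpoint_gb / write_gb_per_s      # time to write one checkpoint
    return write_s / (compute_interval_s + write_s)


if __name__ == "__main__":
    # Hypothetical figures: a 1,000 GB checkpoint saved after every
    # 900 seconds of training, on slower vs. faster storage.
    for bandwidth in (10.0, 50.0):                # aggregate write GB/s
        fraction = checkpoint_overhead(1000.0, bandwidth, 900.0)
        print(f"{bandwidth:.0f} GB/s -> {fraction:.1%} of time spent checkpointing")
```

Under these assumed numbers, raising the write bandwidth from 10 GB/s to 50 GB/s cuts the share of time lost to checkpointing from roughly 10% to about 2%. Real systems often checkpoint asynchronously, which would reduce both figures, so this is an upper-bound style estimate rather than a measurement.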

3. Why does NVIDIA choose to partner with mainstream storage vendors rather than develop storage solutions in-house or through acquisition?

1) In the IT industry, no single company can fulfill all customer requirements. An IT company thrives on layered collaboration rather than closed, all-in-one solutions. Although targeting the entire AI data center market, NVIDIA positions itself as a computing platform company. By defining the system architecture and embracing open collaboration, NVIDIA can meet diverse customer needs across different phases and partner with other companies to construct future-proof data centers.

2) If NVIDIA were to develop its own storage products, these would neither replace the customers' existing production storage nor effectively utilize production data. By partnering with ECB storage vendors, NVIDIA integrates its computing platforms with existing enterprise storage systems, which is a practical approach to reinforce its dominance.

3) Traditional storage vendors have served enterprise and carrier customers for two to three decades and have built up a substantial customer base and a large stock of existing data. NVIDIA cooperates with these traditional CPU-centric storage vendors to align them with its ecosystem. By creating joint solutions, NVIDIA enables its hardware and software to be sold alongside storage vendors' products, thereby expanding NVIDIA's customer reach and product coverage. Additionally, these vendors possess mature enterprise-class delivery capabilities, an area in which NVIDIA does not excel. In summary, the cooperation is a win-win for both parties.

4. Recommendations for customers in China

Storage can never be overlooked, regardless of the computing platform (NVIDIA, Ascend, etc.) that customers use. ECB storage is a standard component of NVIDIA systems at every cluster size. For customers in China, it is crucial to focus on building robust storage infrastructure, whether their AI clusters are still being planned or are already under construction. Even a "small" investment in storage can lead to a "big" enhancement in AI system efficiency.