By Ge Jianzhuang, Chief Architect of Huawei DCS Solutions

The explosion of large AI models has opened new opportunities for innovation across industries and may even reshape enterprises' businesses and products, giving some enterprises a chance to leapfrog their competitors. At the same time, the industry now has many large-model vendors and has entered a "war of a hundred models". Enterprises face many challenges in choosing among this dazzling array of large models and in putting a large-model strategy into practice.

First, large models are difficult to choose: how do I choose a large model that suits my business needs? Mainstream NLP large models in the industry are relatively mature. Multimodal large models are developing continuously, but their overall maturity is still insufficient. Enterprises can therefore give priority to NLP large models that have relevant industry cases, for intelligent customer service, scenic-spot guides, policy Q&A, and other scenarios based on natural-language interaction, CV, and voice. For scenarios where business data security requirements are not high, there is little demand for industry-specific capabilities, and business applications are developed only on top of large-model outputs, you can develop new services through the API of a cloud-hosted large model (for example, OpenAI). If the business data is private enterprise data and a general-purpose large model cannot directly meet business needs, so that further optimization with industry and private business data is required, we recommend deploying a large model locally within the enterprise, fine-tuning it on the enterprise's private data, and then developing AI large-model applications. When large models are deployed within an enterprise, multiple models may need to be compared repeatedly to evaluate which are usable. An open, high-performance infrastructure base suitable for deploying multiple large models is therefore an important consideration for enterprises that want to reduce the deployment and trial-and-error costs of large models, and a pre-optimized AI large-model all-in-one machine becomes an important option.
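The selection guidance above can be written down as a small decision rule. The following is only an illustrative sketch: the `Scenario` fields and the `choose_deployment` function are hypothetical names, and treating either condition alone as enough to recommend local deployment is an assumption, since the text only describes the two clear-cut cases.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    data_is_private: bool        # business data is private enterprise data
    needs_industry_tuning: bool  # a general-purpose model does not meet needs as-is


def choose_deployment(s: Scenario) -> str:
    """Return a deployment mode following the selection guidance in the text."""
    # Assumption: either condition alone is enough to prefer local deployment.
    if s.data_is_private or s.needs_industry_tuning:
        return "local deployment + fine-tuning"
    return "cloud model API"


print(choose_deployment(Scenario(data_is_private=False, needs_industry_tuning=False)))
print(choose_deployment(Scenario(data_is_private=True, needs_industry_tuning=True)))
```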

The second challenge is that AI large models are difficult to deploy and maintain quickly: good operation of a large model depends on deep co-design and joint optimization of computing, storage, and networking. AI involves a large amount of full-stack hardware and software, forming a relatively heavy technology stack, and a single point of failure can interrupt the business or degrade large-model performance. The traditional approach of assembling and piecing components together makes it difficult to optimize computing, storage, and networking jointly, and cannot meet enterprise requirements for short delivery cycles and lower O&M difficulty. A pre-optimized, full-stack AI large-model all-in-one machine offers a way to solve the problem.

The third challenge is that AI model tuning strains data efficiency and storage performance: the vendor's general-purpose large model (L0 large model) needs to be fine-tuned on the enterprise's industry data at the customer site to create an industry large model (L1 large model), and each business department can further fine-tune it on departmental business data into a scenario-specific large model (L2 large model). Industry data and department data must be collected, cleaned, and preprocessed. Typically this data is stored separately, processed separately, and then copied to the training cluster. Multiple copies lengthen the data-processing cycle and make data consistency hard to guarantee, so the data-preprocessing platform and the training platform need to share data to improve data efficiency. Training requires multi-machine, multi-card collaboration. Training data must be shared among multiple machines and consists mainly of small files, so small-file storage performance has a great impact on training efficiency. In addition, AI models have long training cycles and are prone to interruption, so checkpoints are written frequently during training to save intermediate training state and results. When training is interrupted, whether by model-parameter problems or hardware faults, it can be resumed promptly from the checkpoint on shared storage. High-performance, reliable shared storage is therefore one of the key indicators.
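The checkpoint-and-resume pattern described above can be sketched as follows. This is a minimal illustration rather than the method of any particular training framework: the function names, the `ckpt_<step>.json` file layout, and the use of JSON for the training state are all assumptions, and the atomicity of the rename should be verified for the shared file system actually in use.

```python
import json
import os
import tempfile


def save_checkpoint(ckpt_dir: str, step: int, state: dict) -> str:
    """Write the training state for `step` into the shared checkpoint directory."""
    os.makedirs(ckpt_dir, exist_ok=True)
    final_path = os.path.join(ckpt_dir, f"ckpt_{step:08d}.json")
    # Write to a temporary file first so a crash never leaves a half-written checkpoint.
    fd, tmp_path = tempfile.mkstemp(dir=ckpt_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, final_path)  # rename into place once the data is on disk
    return final_path


def load_latest_checkpoint(ckpt_dir: str):
    """Return (step, state) from the newest checkpoint, or (0, None) if none exists."""
    if not os.path.isdir(ckpt_dir):
        return 0, None
    # Zero-padded step numbers make lexicographic order match numeric order.
    names = sorted(n for n in os.listdir(ckpt_dir)
                   if n.startswith("ckpt_") and n.endswith(".json"))
    if not names:
        return 0, None
    with open(os.path.join(ckpt_dir, names[-1])) as f:
        data = json.load(f)
    return data["step"], data["state"]
```

After an interruption, any node that mounts the same shared directory can call `load_latest_checkpoint` and continue from the returned step, which is why the text stresses that the storage must be both shared and reliable.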

The fourth challenge is continuously updating the L2 model in inference scenarios: after the L2 model is fine-tuned, it can be quickly deployed together with application software in edge-side business scenarios for inference. The edge side needs to improve O&M efficiency, achieve O&M without site visits, and allow the L2 large model to be progressively optimized and flexibly updated from the enterprise center. How to deploy L2 large models and applications quickly on the edge side, and how to update them in coordination with the enterprise center's L2 large model, has therefore become a key challenge. A large-model inference all-in-one machine that supports edge-cloud collaboration, model upgrades with zero service interruption, and integrated software and hardware is the best choice.

The fifth challenge in implementing large models in enterprises is full-link model security: a large model is a core asset of both large-model vendors and enterprise customers. The full-link security of the L0 model when the vendor delivers it to the enterprise, and the full-link security of the L1 and L2 large models that the enterprise fine-tunes from it and that contain the enterprise's confidential information, must be designed and considered in a unified way to prevent large models from being copied and abused without control. Enterprises therefore need to design the security asset-management mechanism and the security management model for large models end to end.

In summary, deploying large models in enterprises still has a long way to go. An AI full-stack all-in-one machine that is pre-optimized, open, and prefabricated with computing, storage, networking, full-stack management software, a large-model development tool chain, and large models, and that supports smooth updates of edge-side models, is the best choice.

I. An all-in-one machine is recommended for deploying large models within an enterprise

Large-model training has specific requirements for networking and storage. For example, the parameter network generally requires 200G or 400G bandwidth, training on massive numbers of small files requires shared storage with high read/write IOPS, and checkpoint reads and writes also need high IOPS. The general-purpose storage and networking of common cloud platforms cannot meet these training requirements, so a standard cloud platform built by an enterprise in the usual way cannot meet the deployment requirements of large models. Deploying the large-model infrastructure independently is currently the best approach, and the recommended solution is to deploy and deliver a large-model all-in-one machine that is tuned independently and scales out smoothly on demand.

1. Deploying large models in a full-stack all-in-one machine improves delivery efficiency, reduces maintenance costs, and improves business development efficiency of large models.

Building AI large-model infrastructure by combining separate components is an option, but it poses many challenges.

a. Long delivery cycle and complex management: a component-based infrastructure solution requires separate procurement of computing, storage, networking, cabinets, and so on. Each device is managed independently, so O&M is complex. On-site assembly and deployment, on-site network tuning, and joint tuning of the large model with the equipment are all required, resulting in a long delivery cycle and high delivery costs.

b. Large impact on data center energy consumption and space planning: the equipment models differ and their energy consumption is uncertain. Data center power supply and cooling must be designed around the power consumption and heat dissipation of the equipment the large model requires, so uncertainty about power consumption and space leads to great waste in the data center's power supply, space design, and energy consumption.

With a full-stack all-in-one machine, computing, storage, networking, and the cabinet are comprehensively optimized at the factory. The equipment, computing power, storage capacity, network bandwidth, power consumption, and data center footprint are all fixed in advance, with high-reliability assurance and unified monitoring and management of the full hardware stack, so the power and cooling changes needed in the data center can be evaluated directly. This greatly improves the efficiency of deploying and operating large models. Because the all-in-one solution solves the deployment and O&M problems, enterprises can spend more time on business innovation with large models and improve innovation efficiency.

2. All-in-one machines need to be open and compatible with multiple large models to reduce infrastructure construction costs.

Enterprises need a variety of large-model capabilities during model selection and testing and in subsequent business development, and may introduce models from many vendors. If a different infrastructure is purchased for each type of large model, each one must be optimized separately, and O&M and procurement costs rise sharply. By distilling the essential requirements of large models (networking, storage, computing power, and resource scheduling and management) and optimizing for them in depth, multiple large models can be deployed in one environment, reducing enterprise procurement and O&M costs.

3. Enterprises need three types of large-model all-in-one machines to support the implementation of enterprise large models

a. Training all-in-one machine: for scenarios where data changes frequently, model accuracy is continuously improved, and training is frequent, the enterprise continuously trains L1 or L2 large models on new data, starting from an L0 large model or an already fine-tuned L1 large model. This requires a large-model training all-in-one machine with powerful computing power whose storage and computing power can be expanded on demand. The scenario calls for a high-performance parameter training network (200G, 400G, or higher), high-performance shared file storage with strong small-file access capabilities, and a large number of GPU/NPU AI cards.

b. Inference all-in-one machine: this is the most frequently used scenario in enterprises and the main form in which large models are implemented there. Distilled or pruned large models are deployed together with AI applications to serve end-customer business. Because of their large parameter counts (tens or hundreds of billions), large models generally require joint inference across multiple cards, so high-density GPU/NPU computing power is a key requirement. Unified, intelligent, and efficient scheduling of computing power and pooling of high-density computing power are the key capabilities for improving the operating efficiency of the inference engine.

c. Training-and-inference all-in-one machine: for scenarios that focus on inference but periodically do a small amount of training, a container platform is needed to dynamically schedule CPU and GPU resources according to specified SLAs, with priority given to inference. In this scenario, with GPU passthrough or vGPU, a large amount of AI computing power is lost to failures, scheduling, wasted time slices, wasted space units, and similar causes, and real computing-power utilization is below 30%. More efficient GPU pooling is therefore needed, with training and inference processes sharing a mixed deployment on demand and SLA-based scheduling, to raise GPU resource utilization and reduce training costs.
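The "inference first" rule for a shared GPU pool can be illustrated with a very small allocator. This is a sketch under simplifying assumptions, not the scheduler of any real container platform: `allocate_gpus` is a hypothetical name, and it hands out whole GPUs, whereas real pooling technology may share fractions of a card.

```python
def allocate_gpus(total_gpus: int, inference_demand: int, training_demand: int) -> dict:
    """Split a pooled GPU budget, serving inference before training."""
    if min(total_gpus, inference_demand, training_demand) < 0:
        raise ValueError("GPU counts must be non-negative")
    # Inference is satisfied first, up to the size of the pool.
    inference = min(inference_demand, total_gpus)
    # Training only receives what inference leaves over.
    training = min(training_demand, total_gpus - inference)
    return {
        "inference": inference,
        "training": training,
        "idle": total_gpus - inference - training,
    }


print(allocate_gpus(total_gpus=8, inference_demand=6, training_demand=4))
```

When inference load drops, rerunning the allocation hands the freed cards to training, which is the mechanism by which mixed deployment raises overall utilization.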

II. Clear security specifications must be formulated for large-model training and inference to ensure the security of large-model assets

A large model is essentially a database that stores all of the material it was trained on. A model that an enterprise has fine-tuned on industry data and department data therefore contains various kinds of sensitive enterprise data, and a leak would have serious consequences for the enterprise. Security management is thus required across the entire model link.

1. Training data security

Training data is core enterprise data. During collection, cleaning, and preprocessing, training data is authorized, accessed, and desensitized on demand, and its spread is strictly controlled.

2. Large-model sharing security

After a large model is shared and released, access must be controlled so that only authorized users can access and use it, preventing illegal copying, cracking, and use. Attackers must also be prevented from tampering with the model in the model-publishing supply chain. During model release and use, it is therefore necessary to build secure and reliable large-model sharing capabilities through asset integrity protection and asset encryption to ensure the safe operation of AI assets:

(1) Asset integrity protection: published assets are digitally signed at both the inner and outer layers so that frontline services and customers can verify asset integrity and ensure that assets are applied safely;

(2) Asset encryption: white-box encryption and decryption tables and local keys are used to secure AI assets and protect them from malicious theft.
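The integrity check in item (1) can be illustrated with Python's standard library. Note the substitution: the text describes digital signatures, which are asymmetric, while this sketch uses HMAC-SHA256, a symmetric keyed hash, purely because it needs no third-party package. The function names and the sample key are hypothetical, and only a single signing layer is shown.

```python
import hashlib
import hmac


def sign_asset(asset: bytes, key: bytes) -> str:
    """Compute an integrity tag over the published model asset."""
    return hmac.new(key, asset, hashlib.sha256).hexdigest()


def verify_asset(asset: bytes, key: bytes, tag: str) -> bool:
    """Check the received asset against the tag shipped with it."""
    expected = sign_asset(asset, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


key = b"example-key-for-illustration-only"
tag = sign_asset(b"model weights v1", key)
print(verify_asset(b"model weights v1", key, tag))   # unmodified asset
print(verify_asset(b"model weights v1!", key, tag))  # tampered asset
```

In a production setting the publisher would sign with a private key and consumers would verify with the matching public key, so that verifying parties cannot forge tags.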

In addition, the data embodied in L0 large models is generally general-purpose data. Enterprise data generally cannot be provided to large-model vendors for training because of the potential security risks. Large models are therefore usually deployed within enterprises in a combination of two ways: a. Fine-tune the L0 large model on industry data and department data. b. Because a large model typically lags by several months between the end of training and its use, combine the L2 large model with a vector database, which supplies the latest information produced after the L2 model was fine-tuned. Vector databases therefore also need controlled access and data privacy protection.
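The combination of an L2 model with a vector database, including the access control the text calls for, can be sketched as a permission-filtered similarity search. Everything here is illustrative: the two-dimensional vectors are hand-written stand-ins for real embeddings, the in-memory `STORE` list stands in for a vector database, and the role names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, store, user_roles, top_k=2):
    """Return the top_k most similar documents the user is allowed to read."""
    # Filter by permission before ranking so restricted text never leaves the store.
    allowed = [d for d in store if d["roles"] & user_roles]
    ranked = sorted(allowed, key=lambda d: cosine(query_vec, d["vec"]), reverse=True)
    return [d["text"] for d in ranked[:top_k]]


STORE = [
    {"text": "Q3 pricing policy", "vec": [1.0, 0.0], "roles": {"sales"}},
    {"text": "New HR leave rules", "vec": [0.0, 1.0], "roles": {"hr", "sales"}},
    {"text": "Unreleased product spec", "vec": [0.9, 0.1], "roles": {"rnd"}},
]

print(retrieve([1.0, 0.0], STORE, {"sales"}, top_k=1))
```

The retrieved passages would then be added to the prompt sent to the L2 model, which is how recent information reaches the model without retraining it.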

3. Inference data security

After the L2 large model is trained, client applications interact with it using confidential enterprise business information, and the uploaded information may contain sensitive business information. The inference side of the large model records such information as interaction context. This inference information must be stored securely and access-controlled, and regular cleanup policies must be formulated to prevent leakage of sensitive information.
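A regular cleanup policy for recorded interaction context can be as simple as an age-based filter. The sketch below is an assumption about how such a policy might look, not a description of any product feature: `purge_expired` and the `created_at` field (seconds since the epoch) are hypothetical names.

```python
import time


def purge_expired(records, max_age_seconds, now=None):
    """Return only the interaction records younger than max_age_seconds."""
    now = time.time() if now is None else now
    return [r for r in records if now - r["created_at"] < max_age_seconds]


records = [
    {"id": 1, "created_at": 0},   # old interaction
    {"id": 2, "created_at": 90},  # recent interaction
]
# With a 60-second retention window evaluated at t=100, only the recent record is kept.
print(purge_expired(records, max_age_seconds=60, now=100))
```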

4. Large-model application security

Large-model applications can access large models directly, so the application itself is one of the key entry points for large-model security. Secure containers and secure boot can be used to isolate applications in highly secure and reliable isolation domains, combined with container security detection, to ensure that the application itself does not become an attack entry point and thus to protect data and models.

III. The training or training-and-inference all-in-one machine and the edge inference all-in-one machine work together to update the L2 model periodically and improve the customer experience

The training or training-and-inference all-in-one machine periodically updates the L2 large model based on the latest data collected by the enterprise to improve the precision and accuracy of business inference. After the L2 model is retrained, the new model is synchronized to the edge inference all-in-one machine through the tool chain, and the edge inference all-in-one machine can update automatically or perform rolling and incremental upgrades to ensure that large-model services are not interrupted during the upgrade.
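The idea behind a rolling upgrade is that only a small batch of inference nodes is taken out of service at a time, so some capacity is always serving. The following is a toy simulation of that idea, not the FusionCube upgrade mechanism: the node dictionaries, the `rolling_upgrade` function, and the version labels are all hypothetical.

```python
def rolling_upgrade(nodes, new_version, batch_size=1):
    """Upgrade nodes batch by batch; return the fewest nodes serving at any point."""
    if not 0 < batch_size < len(nodes):
        raise ValueError("batch_size must leave at least one node serving")
    min_serving = len(nodes)
    for i in range(0, len(nodes), batch_size):
        batch = nodes[i:i + batch_size]
        for n in batch:
            n["serving"] = False          # drain traffic from this batch
        min_serving = min(min_serving, sum(n["serving"] for n in nodes))
        for n in batch:
            n["version"] = new_version    # swap in the retrained L2 model
            n["serving"] = True           # return the node to service
    return min_serving


nodes = [{"version": "v1", "serving": True} for _ in range(4)]
print(rolling_upgrade(nodes, "v2", batch_size=2))
```

Because the returned minimum never reaches zero, requests can always be routed to a node that is still serving, which is what keeps the business uninterrupted during the upgrade.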

IV. The Huawei FusionCube A3000 training-and-inference all-in-one machine helps enterprises cover the last mile of large-model adoption

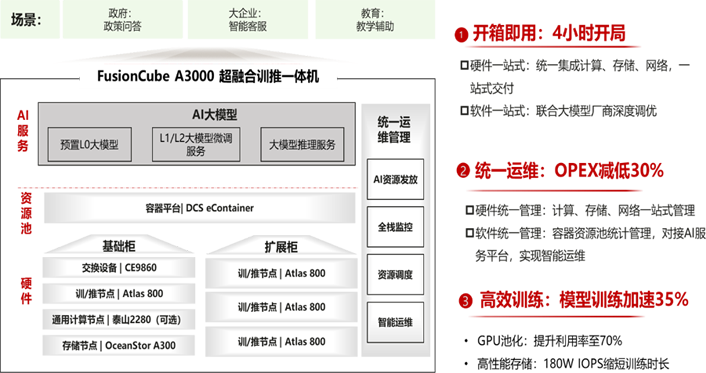

The Huawei FusionCube A3000 all-in-one machine is offered to enterprise customers in three forms: a training all-in-one machine, a training-and-inference all-in-one machine, and an inference all-in-one machine. It comes with some vendors' L0 large models and a large-model development tool chain built in, focuses on customers' L1 and L2 large-model scenarios, and gives enterprises an out-of-the-box AI platform that provides a solid base for enterprise AI large-model innovation.

The FusionCube A3000 is factory-prefabricated with high-performance training node servers, high-performance shared storage, AI network equipment of up to 400G, and an integrated cabinet. The full hardware stack is managed and monitored in a unified way through the MetaVision management software, with one-click problem location and minute-level fault repair.

1. High-performance training-and-inference server nodes

A single server supports a Huawei Ascend 910 8-card module with FP16 computing power of 2.5 PFLOPS, or an NVIDIA H800 8-card module with FP16 computing power of up to 15.8 PFLOPS. It can be smoothly scaled out to 16 nodes supporting 128 cards, providing ample computing power for a wide variety of scenarios.
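The scale-out figures above follow from simple multiplication, shown here as a quick check. The card count comes directly from the text. The aggregate PFLOPS values are not stated in the source: they are theoretical peaks that assume perfectly linear scaling across nodes, which real training workloads do not achieve.

```python
CARDS_PER_NODE = 8
MAX_NODES = 16

# Per-node FP16 peak figures quoted in the text, in PFLOPS.
PEAK_PFLOPS_PER_NODE = {"Ascend 910": 2.5, "H800": 15.8}

total_cards = CARDS_PER_NODE * MAX_NODES
print(f"Cards at full scale-out: {total_cards}")

for card, per_node in PEAK_PFLOPS_PER_NODE.items():
    # Assumption: linear scaling, so this is an upper bound rather than a measured value.
    aggregate = per_node * MAX_NODES
    print(f"{card}: up to {aggregate:.1f} PFLOPS theoretical FP16 peak")
```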

2. High-performance shared storage

The storage uses standard file interfaces to support future expansion to multiple training platforms. It provides large capacity, shared access, and performance on par with local disks, and it supports remote data synchronization.

a. GPU direct access (NFS over RDMA): training-data loading time is reduced to the hundred-millisecond level and data transmission bandwidth is increased 2 to 8 times, reducing memory usage on the training server and making GPUs more efficient.

b. High performance: multi-link aggregation and client-side data caching accelerate single streams and improve performance 4 to 6 times, with automatic link failover within seconds. Built on the OceanStor Dorado architecture, with FlashLink for stable latency, DTOE protocol offload, and intelligent metadata caching, it delivers industry-leading enterprise NAS performance with sub-millisecond latency.

c. Efficient training: multi-level balancing technology accelerates small-file scenarios. A single storage node can reach 1.8 million IOPS and a bandwidth of 50 Gb/s.

3. High-performance parameter network

- High-density 400GE/100GE/40GE aggregation with ultra-large capacity;

- High reliability across the whole network, with faults imperceptible to services;

- Intelligent lossless networking that meets RoCEv2 application performance requirements;

- Zero-configuration device deployment and automated O&M.

4. One-stop large-model development tool chain

- A one-stop data management center covering data collection, labeling, cleaning, and processing provides high-quality data for AI model development.

- It connects flexibly to multiple types of data sources, supports multimodal data access and visual management, and supports convenient data import, export, viewing, version management, and permission control.

- Rich built-in data preprocessing algorithms provide data-processing services such as image enhancement, deduplication, cropping, and uniform video frame extraction.

- Using the fine-tuning development tool chain provided by the large-model open service platform, users can train and deploy their own dedicated models on the platform with only a small amount of industry data and a few simple steps.

The FusionCube A3000 hyper-converged all-in-one machine features a full-stack modular design, whole-cabinet delivery, out-of-the-box use, and built-in AI large models, reshaping the industry landscape and helping AI applications develop with high quality.
