Source: Huawei data storage FusionCube hyper-converged infrastructure 2024 special issue

According to Wikipedia's definition, virtualization is a resource-management technology. It abstracts and transforms a computer's physical resources (CPU, memory, disk space, network adapters, and so on) and presents them so that they can be partitioned and combined into one or more computer configuration environments. This breaks down the barriers between physical structures that otherwise cannot be divided, and lets users apply the hardware resources in better ways than the original configuration allows.

Virtualization is generally traced back to 1959, when Christopher Strachey, a computer scientist later appointed professor at Oxford University, presented the paper "Time Sharing in Large Fast Computers" at the International Conference on Information Processing. This was the first proposal of the basic concept of virtualization. In Strachey's view, virtualization is first of all a time-division technology: by dividing processor time into slices, a computer can process different applications, or different threads of a program, concurrently. Beyond this time-division multiplexing, the key point is that the computer's hardware resources are virtualized along the dimension of time slices. The paper also anticipated several major challenges of virtualization, such as isolation (how to ensure that different processes do not interfere with each other when using computer resources) and efficiency (how to avoid a drop in hardware utilization after virtualization).
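The idea of dividing processor time into slices can be illustrated with a minimal round-robin sketch. It is only an illustration under simplifying assumptions: the task names, work units, and slice length are hypothetical, and real time-sharing systems also deal with priorities, I/O waits, and context-switch costs.

```python
from collections import deque


def round_robin(tasks, time_slice):
    """Simulate time sharing: each task runs for at most `time_slice` units per turn.

    tasks: dict mapping a (hypothetical) task name to the total work units it needs.
    Returns the execution timeline as a list of (task, start, end) tuples.
    """
    queue = deque(tasks.items())
    clock = 0
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(time_slice, remaining)  # use one slice, or less if the task finishes
        timeline.append((name, clock, clock + run))
        clock += run
        remaining -= run
        if remaining > 0:
            # Not finished: rejoin the back of the queue and wait for the next slice.
            queue.append((name, remaining))
    return timeline


if __name__ == "__main__":
    for entry in round_robin({"A": 3, "B": 2, "C": 1}, time_slice=1):
        print(entry)
```

With a one-unit slice, tasks A, B, and C take turns on the single processor, so each appears to make progress at the same time even though only one runs at any instant.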

As this shows, virtualization initially served mainframe systems. At the time, computing resources were scarce and very expensive, and virtualization emerged to make fuller and easier use of them. In 1972, IBM released its virtual machine (VM) technology, which could adjust and allocate resources according to users' dynamic application requirements in order to make the fullest possible use of expensive mainframe resources. Virtualization thus entered the mainframe era. On the IBM System/370 series, a program called the virtual machine monitor (VMM) creates many virtual machine instances on the physical hardware, each able to run its own independent operating system, and this made virtual machines popular.

The emergence of the x86 architecture broke the monopoly of mainframes and greatly reduced the cost of computing resources. However, a single x86 server has limited capacity, so multiple servers generally have to be combined to match or exceed the capacity of a mainframe. How can multiple x86 servers be combined into one x86 cluster, and how are resources managed once they form a cluster? Virtualization once again plays the key role, defining, allocating, and managing hardware resources through software. From this perspective, virtualization resembles an operating system: if the operating system manages the resources of a single computer, virtualization manages the resources of a computer cluster, so virtualization can be regarded as the operating system of the cluster.

VMware development

VMware is the undisputed leader of virtualization in the x86 era. It defined the virtualization industry and built a broad ecosystem of southbound hardware vendors and northbound application vendors, which together built a virtualization business empire. In 1999, VMware was the first to launch commercial virtualization software that ran smoothly on the x86 platform, VMware Workstation. In 2001, VMware launched its first server virtualization product, GSX Server, which was designed on the Type II (hosted hypervisor) virtualization model. GSX therefore required an operating system to be installed on the physical host first, with VMware GSX then installed on top of it as an application; VMware managed resources and virtual machines through the host operating system. The biggest problem with hosted virtualization is its excessive reliance on the host operating system. VMware's answer was ESX, also released in 2001, which is installed directly on the physical computer. This installation method is called bare-metal installation. However, ESX could not completely abandon a host operating system. Its solution was to integrate the virtualization program with an operating system, bundling the virtualization core with a Linux-based service console. ESX effectively solved the problem of over-reliance on the host operating system, but this architecture still had its own defects. First, because the virtualization layer included a Linux operating system, the non-virtualization processes in that Linux system occupied some host resources, wasting them. Second, resources and virtual machines could only be managed through scripts and agents, which was very inconvenient.

In 2011, with vSphere 5.0, VMware made ESXi (first released in 2007) its only hypervisor. Together with vCenter Server and other functional components, ESXi forms the VMware vSphere virtualization product, currently the most widely used virtualization product. Like ESX, ESXi is installed on bare metal. Its improvement is to eliminate the cumbersome Linux layer from the virtualization layer, keeping only the VMkernel virtualization kernel to manage resources. This greatly reduces the size of the virtualization layer as well as its resource overhead on the physical host. The second major improvement in ESXi is that the console was removed from the hypervisor and turned into an independent component, the vSphere Client, which makes management simpler and more convenient. Because the ESXi architecture runs independently of a general-purpose operating system, it simplifies hypervisor management and improves security.

ESXi essentially established VMware's dominant position in virtualization, and ESXi-based vSphere supported VMware's rapid growth for a decade.

Xen development

Another school of virtualization comes from the open-source Xen, which began as a research project at the University of Cambridge and was later commercialized by XenSource. The first version, Xen 1.0, was released in September 2003. In 2007, XenSource was acquired by Citrix, and Xen became part of Citrix's desktop virtualization capabilities.

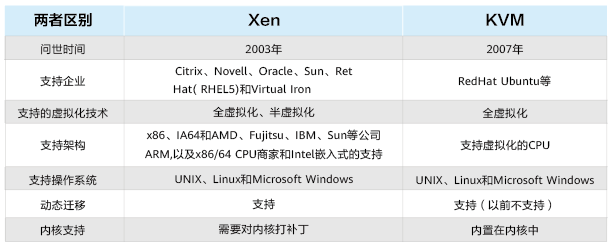

Xen is a typical representative of paravirtualization technology. In paravirtualization, the hypervisor does not emulate I/O devices; it virtualizes only the CPU and memory, while a dedicated management VM is responsible for virtualizing all the input and output devices of the entire hardware platform. The biggest advantage of paravirtualization is that the virtual machine is directly aware of the hypervisor and does not rely on emulated virtual hardware, which yields high performance. Xen also supports a wider range of CPU architectures than VMware ESX/ESXi: VMware targets only the CISC x86/x86_64 architecture, whereas Xen additionally supports non-x86 architectures such as IA-64 and ARM. The disadvantage of Xen is that paravirtualization requires a specially adapted guest kernel, meaning the guest operating system's kernel must be modified. Because Windows is closed source, it cannot be supported by Xen paravirtualization.

Open-source Xen greatly lowered the technical threshold of virtualization, enabling more enterprises to take part in virtualization research and development and helping to launch China's virtualization industry. In 2013, Huawei released its first Xen-based virtualization version, FusionCompute 3.0, making Huawei a pioneer of virtualization in China.

Red Hat did not want to miss out on the virtualization feast either. In October 2006, KVM (Kernel-based Virtual Machine) was announced on the Linux kernel mailing list, and it was merged into the mainline Linux kernel in early 2007 (version 2.6.20). In 2008, Qumranet, the Israeli company that originally created KVM, was acquired by Red Hat. KVM remains part of the Linux kernel and open source in the Linux community.

KVM is a virtualization solution built on hardware-assisted virtualization technology (Intel VT-x or AMD-V). Guest operating systems run in KVM virtual machines without modification, and each virtual machine can have its own independent virtual hardware resources, such as NICs, disks, and GPU graphics adapters.
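Because KVM depends on these CPU extensions, a common first check on a Linux x86 host is to look for the `vmx` (Intel VT-x) or `svm` (AMD-V) flag in `/proc/cpuinfo`. The sketch below is a minimal, hedged illustration: the function name `hw_virt_extension` is hypothetical, it only parses x86 `flags` lines, and a present flag does not guarantee the extension is enabled in firmware or that the `kvm` module is loaded.

```python
def hw_virt_extension(cpuinfo_text):
    """Return 'VT-x', 'AMD-V', or None from the text of /proc/cpuinfo.

    Assumes the Linux x86 format, where each CPU has a 'flags : ...' line.
    'vmx' indicates Intel VT-x and 'svm' indicates AMD-V.
    """
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(hw_virt_extension(f.read()))
    except FileNotFoundError:
        print("no /proc/cpuinfo (not a Linux host)")
```

On a host where this returns `None`, KVM cannot provide hardware-assisted virtual machines; inside a VM, the flag is usually absent unless nested virtualization is enabled.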

Native KVM support in Linux dealt a fatal blow to Xen, because using the operating system's built-in virtualization capability is the most convenient option for Linux users. In addition, Citrix's strategy shifted to desktop virtualization, and its investment in the Xen community dropped significantly. In this round of technology competition, Xen gradually fell behind. Most Chinese vendors have also switched from the Xen architecture to the KVM architecture: Huawei launched the KVM-based FusionCompute 6.3 in 2018, and other vendors, such as H3C and Sangfor, are also moving to the KVM architecture.

It should be noted that China's virtualization industry has not stopped at commercializing open-source versions. Huawei in particular, through years of technology accumulation and commercial testing, has gradually launched self-developed virtualization software, and it has surpassed VMware in China's virtualization market.

Reprint
