By Chen Mo, Head of the Data Management Product Department, Huawei

Recently a post titled "Virtualization: the end of an era" has been circulating widely online, with overtones of the old rebel slogan "The Azure Sky is dead, the Yellow Sky shall rise; in the jiazi year, the world shall prosper." Counting carefully, it has indeed been almost one jiazi (a 60-year cycle) since IBM launched its epoch-making mainframe virtualization in the 1960s. So is virtualization really dead, with the Yellow Sky on the rise? Let us peel back the layers of fog and separate the lies about virtualization from the truth.

Lie 1: Data centers are moving entirely to the cloud, and virtualization is being marginalized.

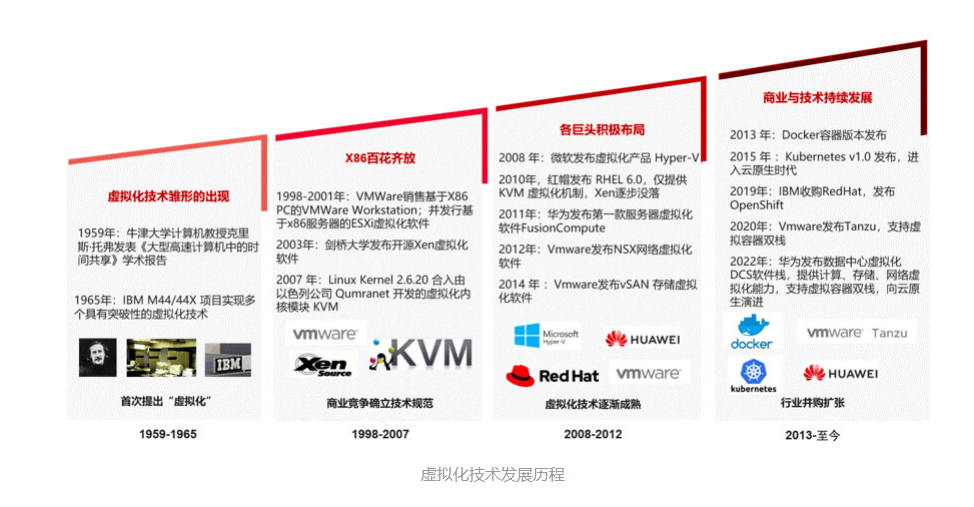

To answer this question, we first need to review what virtualization actually comprises and how it developed. Broadly speaking, virtualization has three parts: compute virtualization, storage virtualization, and network virtualization. Compute virtualization has the longest history, dating back to 1959, when the computer scientist Christopher Strachey (later a professor at Oxford University) presented a paper titled "Time Sharing in Large Fast Computers" at the International Conference on Information Processing. The paper first proposed the basic concept of "virtualization" and is often regarded as the origin of cloud technology. Storage virtualization appeared later. In 1987, the University of California, Berkeley proposed RAID (Redundant Array of Independent Disks), which combines multiple hard disks into a single large virtual disk through hardware or software and can be regarded as the origin of storage virtualization. Network virtualization arose entirely in response to the rapid growth of cloud computing: once clusters reach a certain scale, traditional networking is not flexible enough and is complex to manage, and so SDN (Software-Defined Networking) emerged. Virtualization has therefore been the foundational technology of the cloud from the very beginning. Without good virtualization, there is no good cloud infrastructure.
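To make the storage virtualization idea concrete, here is a minimal sketch of how a striped array (RAID 0 style) can present several disks as one virtual disk. The function name, the `stripe_blocks` parameter, and the block-level addressing are illustrative assumptions, not any particular RAID implementation, and the sketch covers striping only, with no redundancy.

```python
from typing import NamedTuple


class PhysicalLocation(NamedTuple):
    disk: int   # index of the member disk
    block: int  # block offset on that member disk


def map_logical_block(logical_block: int, num_disks: int,
                      stripe_blocks: int = 1) -> PhysicalLocation:
    """Map a block of the virtual disk to a member disk (striping only).

    Hypothetical helper for illustration: consecutive stripes of
    `stripe_blocks` blocks are placed on the disks in round-robin order.
    """
    if logical_block < 0 or num_disks < 1 or stripe_blocks < 1:
        raise ValueError("block must be >= 0, disk and stripe counts >= 1")
    # Which stripe the block falls in, and where inside that stripe.
    stripe_index, offset_in_stripe = divmod(logical_block, stripe_blocks)
    disk = stripe_index % num_disks   # round-robin across member disks
    row = stripe_index // num_disks   # how many stripes precede it on that disk
    return PhysicalLocation(disk, row * stripe_blocks + offset_in_stripe)
```

With four disks and one block per stripe, `map_logical_block(5, num_disks=4)` lands on disk 1 at block 1. The caller only ever sees a single linear block address space, which is the sense in which the array is a virtual disk.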

What, then, is the difference between a virtualized data center and a cloud? First, the business perspectives differ. Virtualization provides resource management and O&M capabilities from the administrator's perspective: administrators deploy services on behalf of users, and the emphasis is on centralized management and control. The cloud lets tenants request resources and deploy services themselves, and the emphasis is on decentralized management in which tenants do not interfere with one another. Second, the business models differ. Virtualization serves internal operations and lowers the operating cost of enterprise IT systems through coordinated resource allocation and automated O&M. The cloud serves a large number of elastic external tenants and monetizes IT resources through resource sharing, auto scaling, and metered billing. Finally, the customer pain points differ. Virtualization is essentially about improving the efficiency of IT resources and delivers IaaS-layer capabilities. The cloud focuses more on the difficulties customers face in digital transformation, offering new technologies such as big data, artificial intelligence, and blockchain as PaaS and SaaS services.

We also see that as the digital transformation of governments and enterprises deepens, virtualization vendors face new requirements, including tenant management, service-oriented delivery, and multi-cloud management. Leading vendors such as VMware and Nutanix have launched solutions that support multi-tenant services and hybrid cloud. Government and enterprise customers can build a virtualized resource pool first and add capabilities gradually as the business grows, which is construction by addition. Alternatively, they can adopt a full cloud stack and then trim away the capabilities they do not need, which is construction by subtraction. In essence, virtualization and cloud are both ways of building IT infrastructure, each suited to different scenarios. They will coexist for a long time and jointly serve the digital transformation of governments and enterprises.

Truth 1: Virtualization technology is the cornerstone of the cloud. Virtualized data centers and clouds each have their own scenarios and will coexist for a long time.

Lie 2: Virtualization is a backward technology that will be replaced by containers.

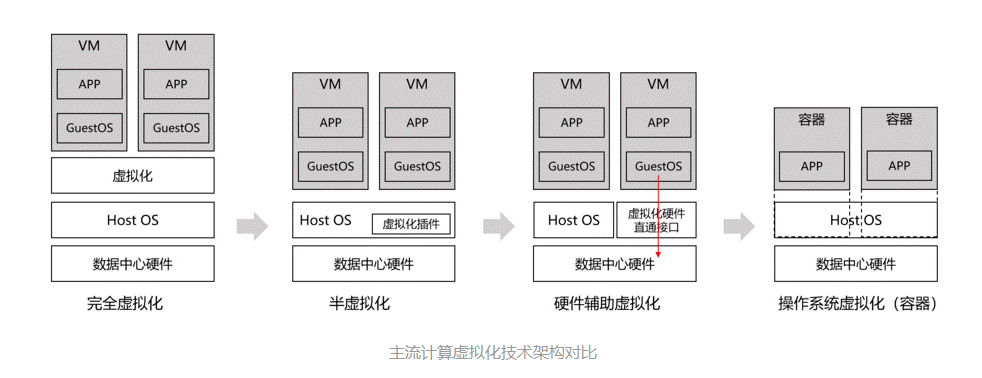

Virtualization, here meaning compute virtualization, made great strides in the x86 era. The earliest form was full virtualization: a hypervisor layer is added on top of the host OS, and a pure-software VMM (virtual machine monitor) emulates the complete underlying hardware, including CPU, memory, clock, and peripherals. The guest OS and its applications can then run in a virtual machine without any adaptation. The drawback is that all instructions must be translated in software, which makes the VMM design more complex and hurts overall system performance.

Paravirtualization was introduced to address these performance problems. It modifies the parts of the guest OS code that access privileged state so that they interact with the VMM directly, and some hardware interfaces are exposed to the guest OS in software form. This improves virtual machine performance. The drawback is that the guest OS must be adapted, and even applications running in the virtual machine may need to be modified and can then no longer run directly on the host OS.

Hardware-assisted virtualization developed next, a direction strongly promoted by chip manufacturers. Intel VT (Intel Virtualization Technology) and AMD-V are the two hardware-assisted virtualization technologies currently available on x86 platforms, and Huawei's Kunpeng-V provides hardware-assisted virtualization on ARM. Its biggest advantage is that the CPU itself provides virtualization instructions, so the VMM no longer needs to trap and translate them, which greatly improves performance. At the same time it keeps the benefits of full virtualization: hardware differences are hidden, and the guest OS and applications need no adaptation. It is currently the mainstream form of virtualization.
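As a small practical illustration, on x86 Linux the presence of these hardware extensions is usually visible as CPU feature flags: `vmx` for Intel VT-x and `svm` for AMD-V in `/proc/cpuinfo`. The sketch below parses that text. The function name is hypothetical, and it makes no attempt to cover ARM platforms, whose `/proc/cpuinfo` uses a different layout.

```python
from typing import Optional


def detect_hw_virtualization(cpuinfo_text: str) -> Optional[str]:
    """Return 'Intel VT-x', 'AMD-V', or None from /proc/cpuinfo-style text.

    Illustrative helper: it only looks at the x86 'flags' lines.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() != "flags":
            continue
        flags = set(value.split())  # exact tokens, not substrings
        if "vmx" in flags:
            return "Intel VT-x"
        if "svm" in flags:
            return "AMD-V"
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(detect_hw_virtualization(f.read()))
    except FileNotFoundError:
        print("no /proc/cpuinfo on this system")
```

A result of `None` can also mean that the extension is disabled in firmware or hidden by an outer hypervisor, so it is a hint rather than proof that the CPU lacks the feature.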

However, virtualization is still a two-layer architecture of guest OS and host OS. It is relatively heavyweight and struggles with agile services that require rapid release and deployment, Internet services in particular. Operating system virtualization, that is, container technology, emerged in response. Operating system virtualization is a lightweight virtualization technology used in server operating systems, with no VMM layer. The kernel creates multiple virtual operating system instances (kernel and libraries) to isolate different processes (containers), and processes in different instances are entirely unaware of one another.

Because container technology eliminates the guest OS, the whole system stack is flatter, and therefore lighter and more efficient. This has led some people to ask whether virtualization should move entirely to containers.

This view is too absolute. First, container technology grew out of Internet businesses, which require fast iteration and fast release and are typical agile workloads. A large number of traditional services, however, care more about runtime stability, reliability, and security. These are steady-state workloads, where virtualization has the advantage. Second, most businesses have not yet been containerized. Apart from the more complex architecture, many traditional businesses that have been containerized see little customer value from it, so for some time to come there is no need to switch tracks. Moving from virtualization to containers also carries a high learning cost, and for most enterprise IT staff the top priority is keeping the business running stably. Enterprises can choose traditional virtualization or container technology according to their business development and scenario needs. Mainstream technology providers such as VMware and Red Hat OpenShift now offer dual-stack support for virtual machines and containers and let customers choose freely, so there is no need for technical anxiety.

Truth 2: Containers are also a form of virtualization technology. The two suit different scenarios, so there is no need for technical anxiety.

Lie 3: Virtualization is only suitable for general-purpose applications and cannot support critical applications.

This misreading stems from a stereotype: virtualization requires instruction translation, consumes a lot of resources, and carries performance and reliability risks, so it cannot support critical applications. Admittedly, virtualization used to host mostly applications with relatively low performance and stability requirements, such as desktop clouds, office automation (OA) systems, and enterprise websites. As the technology has developed, however, hardware-assisted virtualization, as described above, has removed the performance loss between application and CPU. The NoF (NVMe over Fabrics) standard protocol and SPDK, led by Intel and Huawei, enable cross-layer direct access and further improve data access performance between applications and external storage. Virtualization can thus be combined with highly reliable, high-performance external storage to provide a deterministic SLA. More and more critical applications, such as enterprise design platforms, securities trading systems, and hospital information systems (HIS), are being deployed on virtual machines.

Virtualization already offers high resource utilization and easy sharing. As it continues to improve performance and reliability, its range of application scenarios keeps expanding, and it is showing strong vitality.

Truth 3: Virtualization keeps improving in performance and reliability and, having long supported general-purpose applications, is gradually moving into critical applications.

Lie 4: Virtualization is pure software and has nothing to do with hardware.

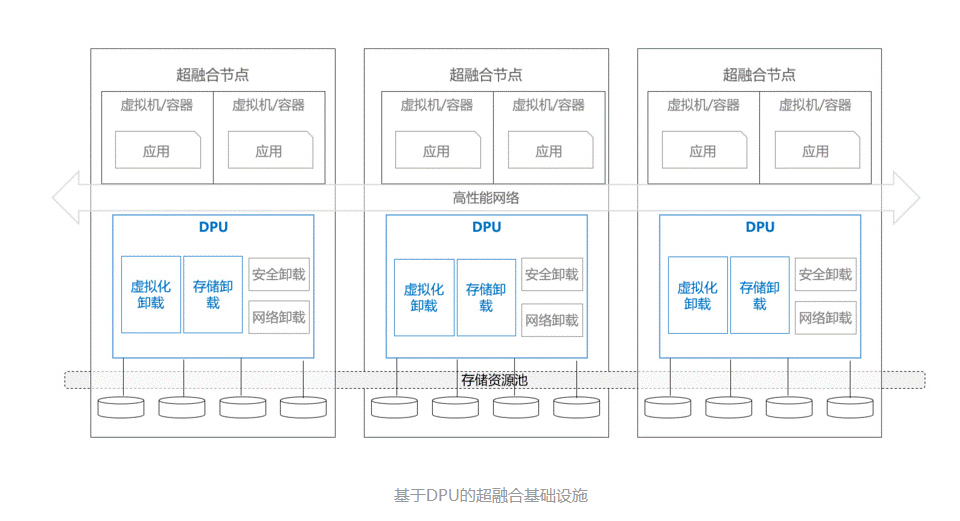

As noted above, hardware-assisted virtualization alone shows that virtualization is not just software but a matter of software and hardware working together and complementing each other. Hyper-converged infrastructure (HCI) is another very important application scenario. Virtualization software defines the hyper-converged hardware, and the two are delivered as a one-stop, integrated software and hardware package. This has gradually become the mainstream way to deploy virtualization.

There is now a clear trend in hyper-convergence toward dedicated hyper-converged hardware. Cisco HyperFlex defines four types of hyper-converged nodes: compute-only nodes based on UCS series hardware, hybrid nodes that balance storage and compute, all-flash NVMe nodes for high-performance scenarios, and edge nodes. HPE Nimble and EMC VxRail likewise define ranges of dedicated hyper-converged hardware.

The DPU card, a recent hot topic, is also worth noting, because it lets hyper-convergence exploit dedicated hardware in a composable way. A DPU is a data processing unit whose goal is to free the CPU from monotonous, repetitive data processing. In a hyper-converged data center architecture, combining a DPU with a disk enclosure offloads storage virtualization to the DPU and yields storage nodes with no CPU at all; combining a DPU with a CPU offloads compute virtualization and yields compute nodes with no disks at all; and DPUs interconnect with one another and move data by offloading network virtualization, so that every CPU can access the data it needs as if from a local disk, eliminating the former cross-node data bottleneck. VMware's Project Monterey has been dedicated to incubating DPU technology. In the latest VxRail 8.0, ESXi is deployed automatically on the DPU to offload the virtualization layer, giving the whole hyper-converged system higher performance.

So when we talk about virtualization technology, we should think not only about software but also about the collaboration and complementarity between virtualization software and hardware, and about full-stack infrastructure capabilities. Virtualization is essentially the adhesive between data center software and hardware.

Truth 4: Virtualization is about not only software but also hardware and full-stack capability. It is the adhesive between data center software and hardware.

Lie 5: Virtualization can only support small-scale data centers and cannot be applied to medium and large data centers.

It is true that virtualization is a mainstream choice for small-scale data centers, but that does not mean it cannot be applied to large data centers.

In terms of technical architecture, data center virtualization has four parts: compute virtualization, storage virtualization, network virtualization, and the O&M management platform. The scale that compute and storage virtualization can support depends on each vendor's software capabilities, and it is now common for mainstream vendors to support thousands of nodes with distributed architectures. VMware's 64-node limit on vSAN is more a commercial decision than a technical constraint. Network virtualization (SDN) was born in large data centers in the first place; small and medium-sized data centers rarely have occasion to use SDN capabilities.

The only bottleneck is the O&M management platform. For large data centers, service provisioning matters even more than ordinary device management and routine O&M. As O&M management platforms evolve into private cloud management platforms that serve both the administrator and tenant perspectives, this need can be met as well. Supporting medium and large data centers is therefore not a technical problem for virtualization.

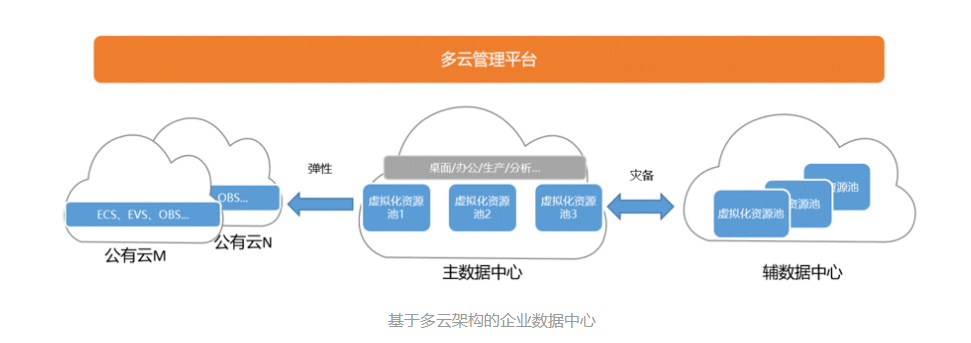

In fact, many virtualized resource pools are already spread across medium and large data centers, such as desktop cloud, office, and video processing resource pools. In the past these pools were regarded as small silos that would eventually have to be merged into a cloud. With the emergence of multi-cloud technologies, however, more and more enterprises have realized that relying on a single cloud inevitably leads to problems such as vendor lock-in, homogeneous competition, and data ownership. They are starting to adopt multi-cloud, bringing self-built clouds, public clouds, and the many existing virtualized resource pools under a multi-cloud management platform for unified management, so as to keep the initiative over their IT infrastructure. From a multi-cloud perspective, a virtualized resource pool is simply one part of the data center, and customers can freely choose and combine resources based on business requirements, vendor capabilities, and commercial terms.

Truth 5: Virtualization will hold its place in medium and large data centers for a long time.

There are too many misunderstandings and misreadings of virtualization. When we return to the technology itself, we find that it is still a field that is developing rapidly and producing breakthroughs. When we return to business logic, we find that it is still a real need and a practical choice for our customers. As the poem goes, we cannot see the true face of Mount Lu because we are standing on the mountain itself. Virtualization is in fact already everywhere. It is both a solid foundation for the public cloud and a reliable partner for enterprise IT customers, so much so that people overlook its presence. They overlook that it is a root technology of the data center, that it has always been the adhesive between data center software and hardware, and that it is the first kilometer that must be secured in enterprise digital transformation. It deserves our long-term research, accumulation, and development.

Disclaimer: The content and opinions in this article represent only the author's personal views. They are offered to readers for the exchange of ideas and technical discussion and do not constitute an official statement about Huawei's products and technologies. For more information about Huawei's products and technologies, visit the product and technology pages or consult Huawei personnel.

Source: https://e.huawei.com/cn/blogs/storage/2023/uncover-the-top-five-lies
