Source: This article is excerpted from the "White Paper on Dedicated Data Processor (DPU) Technology", Institute of Computing Technology, Chinese Academy of Sciences, Chu Guihai et al.

1. What is DPU

A DPU (Data Processing Unit) is a dedicated, data-centric processor that follows a software-defined technical route to support infrastructure-layer services such as resource virtualization, storage, security, and quality-of-service management. The DPU product strategy released by NVIDIA in 2020 positioned the DPU as the "third main chip" of the data center after the CPU and GPU, setting off a wave of industry interest. The emergence of the DPU marks a new stage in heterogeneous computing. Like the GPU, the DPU is another typical case of application-driven architecture design; unlike the GPU, however, the DPU targets workloads closer to the infrastructure layer. The core problem the DPU addresses is "cost reduction and efficiency improvement" for infrastructure: workloads that the CPU processes inefficiently and the GPU cannot handle are offloaded to a dedicated DPU, improving the efficiency of the whole computing system and reducing its total cost of ownership (TCO). The emergence of the DPU may be another milestone in the evolution of computer architecture toward specialization.

Explanation of "D" in DPU

There are three interpretations of the "D" in DPU:

(1) Data Processing Unit. This interpretation puts "data" at the core. It differs from the "signal" handled by communication-related processors such as signal processors and baseband processors, and from the graphics and image data handled by GPUs. "Data" here mainly refers to all kinds of digitized information, especially time-series and structured data such as large structured tables, packets in network streams, and massive volumes of text. The DPU is a dedicated engine for processing such data.

(2) Datacenter Processing Unit. This interpretation takes the data center as the DPU's application scenario. Especially with the rise of the WSC (Warehouse-Scale Computer), data centers of different sizes have become the core infrastructure of IT, and the DPU is indeed promising in data centers at present. However, a data center consists of three parts: computing, network, and storage. The computing part is dominated by CPUs and assisted by GPUs; the network part consists of routers and switches; the storage part consists of RAID systems built from high-density disks and non-volatile storage systems represented by SSDs. Any chip that plays a data processing role in computing or networking could be called a Datacenter Processing Unit, so this interpretation is relatively one-sided.

(3) Data-centric Processing Unit. Data-centric is a processor design philosophy, defined in contrast to control-centric design. The classical von Neumann architecture is a typical control-centric structure: the von Neumann computing model has a controller, an arithmetic unit, memory, input, and output, and in the instruction set this shows up as a series of quite complex conditional jump and addressing instructions. The data-centric concept descends directly from dataflow computing, which is one way to achieve efficient computing. Technical routes that try to break through the Memory Wall, such as near-memory computing, in-memory computing, and the integration of storage and computing, also conform to the data-centric design philosophy.

The three interpretations of "D" above reflect the characteristics of the DPU from different angles, and each has its merits. The author suggests treating them as three dimensions for understanding what a DPU is.

Functions of DPU

The most direct function of the DPU is to act as an offload engine for the CPU: it takes over infrastructure-layer services such as network virtualization and hardware resource pooling, and releases CPU computing power to upper-layer applications. Take network protocol processing as an example. Processing a 10G network at line rate requires about 4 Xeon CPU cores; in other words, network packet processing alone can take up half the computing power of an 8-core high-end CPU. For 40G or 100G high-speed networks, the performance overhead is even harder to bear. Amazon calls this overhead the "Datacenter Tax": the computing resources consumed just to bring in network data, before any business program runs. The AWS Nitro product family aims to offload all data center overhead (providing remote resources to virtual machines, encryption and decryption, fault tracking, security policies, and other service programs) from the CPU to Nitro accelerator cards, releasing the 30% of computing power originally spent on this "tax" to upper-layer applications.
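As a rough illustration of the figures above, the following Python sketch scales the quoted cost of about 4 Xeon cores per 10G of line-rate traffic to higher link speeds. The linear scaling and the function names are assumptions made for illustration only; real per-packet cost depends on packet size, protocol mix, and CPU generation.

```python
# Figure quoted in the text: about 4 Xeon cores to process 10G at line rate.
CORES_PER_10G = 4


def cores_for_line_rate(bandwidth_gbps: float) -> float:
    """Estimate cores needed, ASSUMING cost grows linearly with bandwidth."""
    return CORES_PER_10G * bandwidth_gbps / 10.0


def share_of_cpu(bandwidth_gbps: float, total_cores: int) -> float:
    """Fraction of a CPU with `total_cores` cores spent on packet processing."""
    return cores_for_line_rate(bandwidth_gbps) / total_cores


if __name__ == "__main__":
    for bw in (10, 40, 100):
        cores = cores_for_line_rate(bw)
        print(f"{bw}G: ~{cores:.0f} cores, "
              f"{share_of_cpu(bw, 8):.0%} of an 8-core CPU")
```

Under this simplified assumption, anything beyond 20G would already consume more than a whole 8-core CPU, which is the kind of overhead the text describes as hard to bear.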

The DPU can also become a new data gateway, raising security and privacy to a new level. In a networked environment, the network interface is an ideal privacy boundary, but encryption and decryption algorithms carry high overhead, for example the asymmetric encryption algorithm SM2, the hash algorithm SM3, and the symmetric block cipher SM4. If the CPU does this work, only a small part of the data can be encrypted. In the future, as blockchain-based services gradually mature, running consensus algorithms such as PoW and verifying signatures will also consume a large amount of CPU computing power. All of these can be implemented in hardware on the DPU, and the DPU may even become a root of trust.

The DPU can also serve as a storage entry point, making distributed storage and remote access behave like local storage. As the cost-performance of SSDs becomes acceptable, migrating part of storage to SSD devices has become feasible. The SATA protocol designed for traditional mechanical hard disks is not well suited to SSD storage, so connecting SSDs to the system through local PCIe or a high-speed network becomes the necessary technical route. NVMe (Non-Volatile Memory Express) is a standard high-speed interface protocol for accessing SSD storage; it can use PCIe as the underlying transport to take full advantage of SSD bandwidth. In distributed systems, the NVMe over Fabrics (NVMe-oF) protocol extends this to nodes connected over InfiniBand, Ethernet, or Fibre Channel, with storage shared and accessed remotely via RDMA. Processing for these new protocols can be integrated into the DPU so that it is transparent to the CPU. Furthermore, the DPU may take on the role of controller for various interconnect protocols, reaching a better balance between flexibility and performance.

The DPU will become a sandbox for algorithm acceleration and the most flexible carrier for accelerators. A DPU is not entirely a fixed-function ASIC. Cache-coherent interconnect standards such as CXL and CCIX, which provide coherent data access among CPUs, GPUs, and DPUs, lay the groundwork for further removing obstacles to DPU programming. Combined with programmable devices such as FPGAs, customizable hardware will have more room to play, implementing software functions in hardware will become the norm, and the potential of heterogeneous computing will be fully developed as various DPUs become widespread. Wherever a "killer application" appears, a corresponding DPU may emerge, for example for traditional database workloads such as OLAP and OLTP, 5G edge computing, and V2X for intelligent driving.

2. Development background of DPU

The emergence of the DPU marks another stage in heterogeneous computing. The slowdown of Moore's Law has caused the marginal cost of general-purpose CPU performance growth to rise rapidly. Data show that annual CPU performance growth (after area normalization) is now only about 3%, while demand for computing is growing explosively. This is an important background factor for the development of almost all dedicated computing chips. Taking AI chips as an example, the emergence of ultra-large models with hundreds of billions of parameters, such as GPT-3, has pushed demand for computing power to a new height. The DPU is no exception. With the launch of China's "new infrastructure" strategy in 2019, represented by information networks, the construction of 5G and gigabit optical fiber networks has developed rapidly; the mobile Internet, the industrial Internet, and the Internet of Vehicles are advancing by the day; and infrastructure such as cloud computing, data centers, and intelligent computing centers is expanding rapidly. Network bandwidth is moving from the mainstream 10G to 25G, 40G, 100G, 200G, and even 400G. The sharp increase in network bandwidth and connection counts makes data paths wider and denser, directly exposing computing nodes at the device, edge, and cloud to a surge in data volume, while the growth rates of CPU performance and data volume show a marked "scissors gap". Seeking more efficient computing chips has therefore become an industry consensus, and DPU chips were proposed under this trend.

Bandwidth performance ratio (RBP) imbalance

The rapidly intensifying contradiction between the slowdown of Moore's Law and the explosion of global data volume is usually cited as the background for processor specialization. Although the so-called Moore's Law of silicon has clearly slowed down, a "Moore's Law of data" has arrived. IDC data show that the compound annual growth rate of global data volume over the past 10 years is close to 50%, and IDC further predicts that demand for computing power will double every four months. New computing chips that deliver faster computing power growth than general-purpose processors are therefore needed, and the DPU came into being. Although this background has some validity, it is still too vague: it does not answer what is new about the DPU, or what "quantitative change" led to the "qualitative change".

Judging from the DPU architectures published by various vendors, although the structures differ, they all emphasize network processing capability. From this perspective, the DPU is a chip with strong I/O, which is also its biggest difference from the CPU. The CPU's I/O performance is mainly reflected in its high-speed front-side bus (called the FSB, Front-Side Bus, in Intel's systems). The CPU connects through the FSB to the northbridge chipset, and from there to the main memory system and other high-speed peripherals (mainly PCIe devices). Although newer CPUs weaken the role of the northbridge by integrating the memory controller, the essence is unchanged. The CPU handles network traffic as follows: the NIC receives link-layer data frames, the data is moved by DMA and an interrupt is handled in the operating system (OS) kernel, and the corresponding protocol parser is called to obtain the data transmitted over the network. (There are also technologies that obtain network data directly by polling in user space without kernel interrupts, such as Intel's DPDK and Xilinx's Onload, but their purpose is to reduce interrupt overhead and the cost of switching between kernel mode and user mode; they do not fundamentally enhance I/O performance.) The CPU thus supports network I/O only through quite indirect means, and the CPU's front-side bus bandwidth is matched mainly to main memory bandwidth (especially DDR), not to network I/O bandwidth.

By comparison, the I/O bandwidth of a DPU is almost equal to that of the network: if the network supports 25G, the DPU must support 25G. In this sense, the DPU inherits some features of NIC chips. Unlike a NIC chip, however, the DPU does not only parse link-layer data frames; it also processes the data content directly and performs complex computation. The DPU is therefore a chip with strong computing power built on top of strong I/O. In short, the DPU is an I/O-intensive chip and, compared with a NIC chip, it is also a compute-intensive chip.

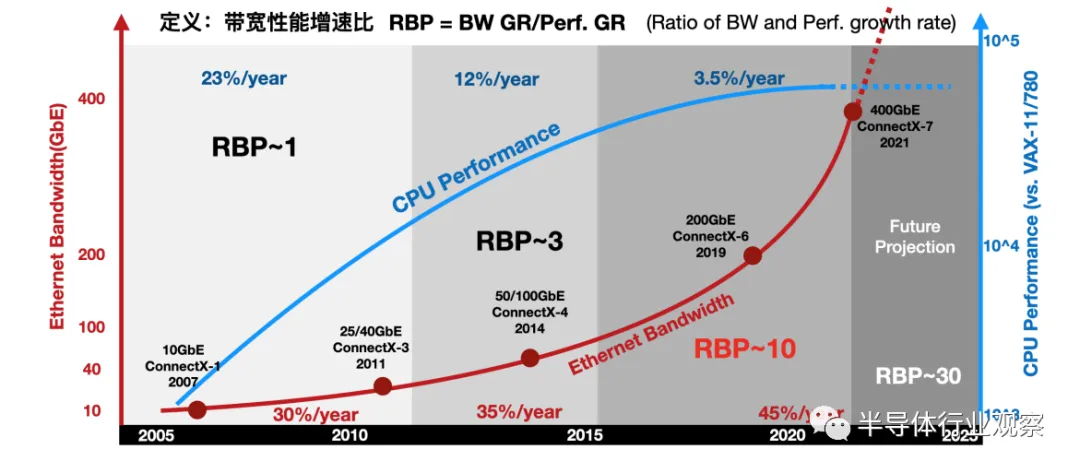

Furthermore, comparing the growth trend of network bandwidth with that of general-purpose CPU performance reveals an interesting phenomenon: an imbalance in the Ratio of Bandwidth and Performance growth rate (RBP). RBP is defined as the growth rate of network bandwidth divided by the growth rate of CPU performance, that is, RBP = BW GR / Perf. GR. As shown in Figure 1-1, taking the bandwidth of Mellanox's ConnectX series NICs as the example for network I/O and the performance of Intel's product series as the example for the CPU, this new metric, the bandwidth-performance growth ratio, reflects how the trend has changed.

Figure 1-1 bandwidth performance imbalance (RBP)

Before 2010, the annual growth rate of network bandwidth was about 30%; it rose slightly to 35% by 2015 and reached 45% in recent years. Correspondingly, CPU performance growth fell from 23% 10 years ago to 12%, and then to just 3% in recent years. Over these three periods, RBP rose from around 1 to around 3, and in recent years has exceeded 10. When network bandwidth and CPU performance grew at nearly the same rate (RBP ≈ 1), I/O pressure had not yet appeared; now that RBP has reached 10, the CPU can hardly cope directly with the growth of network bandwidth. The sharp increase in RBP in recent years may be one of the important reasons why the DPU finally got its opportunity to "be born".
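The RBP figures quoted above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the growth rates are the ones stated in the text, while the period labels and the pairing of each bandwidth figure with a CPU figure are assumptions about how the three periods line up.

```python
# (period label, network bandwidth growth rate, CPU performance growth rate)
# Rates are taken from the text; the pairing by period is an assumption.
PERIODS = [
    ("before 2010", 0.30, 0.23),
    ("around 2015", 0.35, 0.12),
    ("recent years", 0.45, 0.03),
]


def rbp(bw_growth: float, perf_growth: float) -> float:
    """RBP = bandwidth growth rate / CPU performance growth rate."""
    return bw_growth / perf_growth


if __name__ == "__main__":
    for label, bw_gr, perf_gr in PERIODS:
        print(f"{label}: RBP = {rbp(bw_gr, perf_gr):.1f}")
```

The three ratios come out at roughly 1.3, 2.9, and 15, consistent with the description of RBP rising from around 1 to around 3 and then exceeding 10.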

The development trend of heterogeneous computing

The direct effect of the DPU is to "reduce the burden" on the CPU. Some DPU functions can already be seen in the early TOE (TCP Offloading Engine). As its name suggests, a TOE offloads the CPU's TCP processing tasks to the NIC. Although the traditional software approach to TCP processing is clear in structure, it has gradually become a bottleneck for network bandwidth and latency, and the CPU it consumes also reduces the CPU's performance on other applications. TOE technology moves TCP and IP protocol processing to the network interface controller, using hardware acceleration to improve network latency and bandwidth while significantly reducing the CPU's protocol processing load. There are three specific optimizations: 1) isolating network interrupts, 2) reducing the amount of data copied in memory, and 3) parsing protocols in hardware. These three points have gradually developed into three techniques of data plane computing, which DPUs generally need to support as well. For example, the NVMe protocol replaces the interrupt policy with a polling policy to fully exploit the bandwidth of high-speed storage media; DPDK uses user-space calls to implement a kernel-bypass mechanism and achieve zero-copy; and the dedicated cores for specific functions in a DPU, such as complex checksum calculation, packet format parsing, table lookup, and IP Security (IPsec) support, can all be regarded as hardware support for protocol processing. The TOE can therefore be regarded as the embryonic form of the DPU.
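Checksum calculation is one of the protocol-processing tasks mentioned above that can be moved from the CPU into hardware. As a minimal sketch of the per-packet work the CPU would otherwise do, the function below computes the standard 16-bit Internet checksum (RFC 1071) in software. The function name is illustrative, and the code is not taken from any particular TOE or DPU implementation.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement Internet checksum as described in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        # Add each big-endian 16-bit word to the running sum.
        total += (data[i] << 8) | data[i + 1]
    # Fold any carries above 16 bits back into the low 16 bits.
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


if __name__ == "__main__":
    payload = bytes.fromhex("0001f203f4f5f6f7")  # sample bytes from RFC 1071
    checksum = internet_checksum(payload)
    print(f"checksum = 0x{checksum:04x}")
    # A receiver recomputes over data plus checksum and expects zero.
    print(internet_checksum(payload + checksum.to_bytes(2, "big")) == 0)
```

Every byte of every packet passes through this loop when it runs in software, which is why even such a simple function is a natural candidate for offload at high line rates.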

Continuing the idea of the TOE, offloading more computing tasks to the NIC side drove the development of SmartNIC technology. A common SmartNIC uses a high-speed NIC for its basic function and a high-performance FPGA chip as its computing extension, implementing user-defined computing logic to accelerate computation. However, this "NIC + FPGA" model did not turn the SmartNIC into a truly mainstream computing device. Many SmartNIC products are used simply as FPGA accelerator cards: they gain the advantages of FPGAs but also inherit all of their limitations. The DPU integrates the existing SmartNIC approaches. Many traces of earlier SmartNICs can be seen in it, but its positioning is clearly higher than that of any previous SmartNIC.

Amazon's AWS developed its Nitro products in 2013, moving all data center overhead (providing remote resources for virtual machines, encryption and decryption, fault tracking, security policies, and other service programs) onto dedicated accelerators. The Nitro architecture uses a lightweight hypervisor together with custom hardware to separate a virtual machine's computing subsystem (mainly CPU and memory) from its I/O subsystem (mainly network and storage), connecting them through the PCIe bus and saving 30% of CPU resources. The core of the X-Dragon system architecture proposed by Alibaba Cloud is the MOC card, which has rich external interfaces covering computing, storage, and network resources. The core of the MOC card is the X-Dragon SoC, which uniformly supports virtualization of network, I/O, storage, and peripherals, and provides a unified resource pool for virtual machines, bare metal, and container clouds.

The DPU has thus been incubating in the industry for a long time. From early network protocol offload to later network, storage, and virtualization offload, the benefits have been significant. Until now, however, the DPU existed in substance but not in name; it is now time for it to take a new step.

3. Development history of DPU

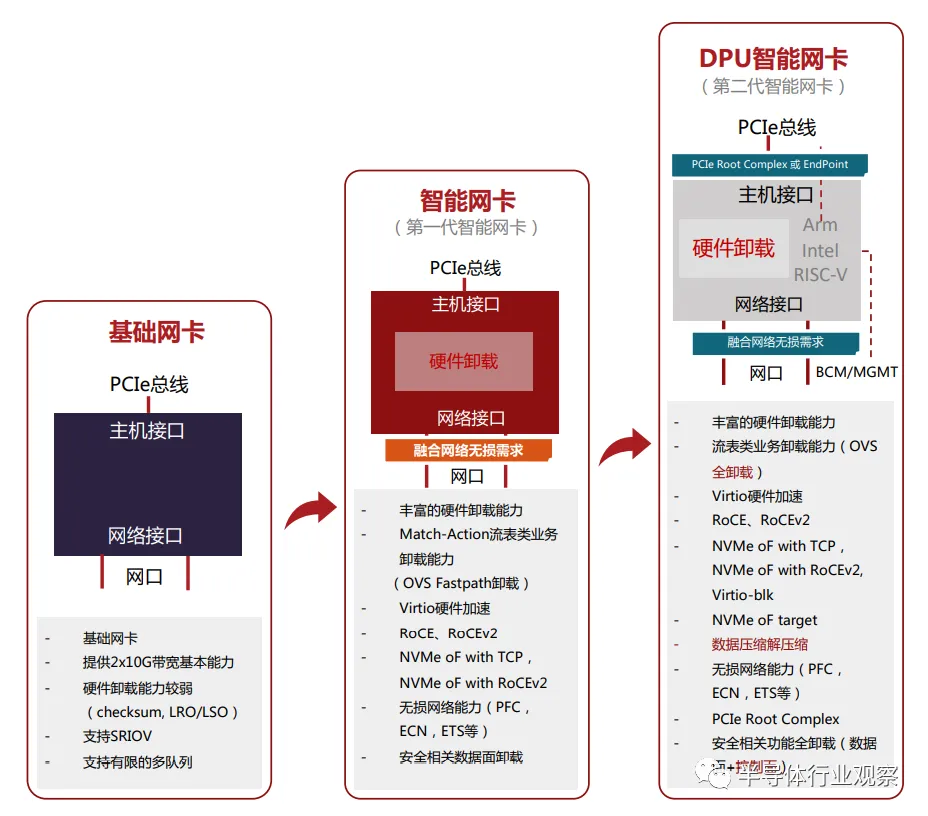

With the development of cloud platform virtualization technology, the evolution of smart NICs can be divided into roughly three stages (as shown in Figure 1-2):

Figure 1-2 Three stages of smart NIC development

Phase 1: Basic function NIC

A basic function NIC (that is, an ordinary NIC) provides 2x 10G or 2x 25G of bandwidth and has limited hardware offload capability, mainly checksum and LRO/LSO, plus SR-IOV support and limited multi-queue capability. In a virtualized network on a cloud platform, a basic function NIC provides network access to virtual machines (VMs) in three main ways: the operating system kernel driver takes over the NIC and distributes network traffic to the VMs; OVS-DPDK takes over the NIC and distributes network traffic to the VMs; or, in high-performance scenarios, network access is provided to the VMs through SR-IOV.

Phase 2: Hardware offload NIC

This can be considered the first generation of smart NIC, with rich hardware offload capabilities. Typical examples include hardware offload of the OVS fast path, hardware offload of RDMA networking based on RoCEv1 and RoCEv2, hardware offload of lossless network capabilities (such as PFC, ECN, and ETS) in converged networks, hardware offload of NVMe-oF in the storage field, and data plane offload for secure transmission. Smart NICs of this period mainly offloaded the data plane.

Phase 3: DPU smart NIC

This can be considered the second generation of smart NIC. A CPU is added to the first-generation smart NIC, so it can offload control plane tasks as well as some flexible, complex data plane tasks. Current DPU smart NICs support both PCIe Root Complex mode and Endpoint mode. When configured in PCIe Root Complex mode, the card can act as an NVMe storage controller and, together with NVMe SSDs, form a storage server. In addition, large-scale data center networks place stricter requirements on lossless networking, and the Incast traffic problem in data center networks must be solved. Major public cloud vendors have proposed their own solutions to the network congestion and latency caused by elephant flows, such as Alibaba Cloud's High Precision Congestion Control (HPCC) and AWS's Scalable Reliable Datagram (SRD). DPU smart NICs will introduce more advanced approaches to such problems; Fungible's TrueFabric, for example, is a new solution on DPU smart NICs. The industry has also proposed offloading the network, storage, and security stacks of the hypervisor. Intel's IPU is representative: it proposes offloading all infrastructure functions to the smart NIC, fully releasing the CPU computing power previously used for hypervisor management.

Future DPU smart NIC hardware

As more and more functions are added to the smart NIC, its power consumption will be hard to keep within 75 W, so an independent power supply will be required. Three forms of smart NIC may therefore appear in the future:

(1) Independently powered smart NICs. These need low-level signaling between the NIC's status and the computing service: whether the smart NIC is already in service during or after startup of the computing system is a question that still needs to be explored and solved.

(2) DPU smart NICs without a PCIe interface. These can form a DPU resource pool dedicated to network functions such as load balancing, access control, and firewalling. Management software can define the required network functions directly through the smart NIC's management interface and expose them as a cluster of virtualized network functions, with no need for a PCIe interface.

(3) DPU chips with multiple PCIe interfaces and multiple network ports. For example, the Fungible F1 chip supports 16 dual-mode PCIe controllers, each configurable in Root Complex or Endpoint mode, and 8x 100G network interfaces. Through PCIe Gen3 x8 interfaces it can support eight dual-socket computing servers, while the network side provides 8x 100G of port bandwidth.
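To get a feel for how the host side and the network side of such a chip compare, the sketch below estimates PCIe Gen3 link bandwidth from the standard signalling rate (8 GT/s per lane with 128b/130b encoding) and sets it against the 8x 100G network ports. It assumes, purely for illustration, that each of the 16 controllers runs one Gen3 x8 link (one per socket of eight dual-socket servers); the text does not state this mapping, and protocol overheads are ignored.

```python
GEN3_GT_PER_LANE = 8.0     # PCIe Gen3 signalling rate per lane, in GT/s
GEN3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency


def pcie_gen3_gbps(lanes: int) -> float:
    """Approximate one-direction Gen3 link bandwidth in Gbit/s,
    before packet and protocol overhead."""
    return lanes * GEN3_GT_PER_LANE * GEN3_ENCODING


CONTROLLERS = 16          # from the text
LANES_PER_CONTROLLER = 8  # ASSUMPTION: one Gen3 x8 link per controller
NETWORK_GBPS = 8 * 100    # 8x 100G network ports, from the text

host_gbps = CONTROLLERS * pcie_gen3_gbps(LANES_PER_CONTROLLER)

if __name__ == "__main__":
    print(f"one Gen3 x8 link: ~{pcie_gen3_gbps(8):.1f} Gbit/s")
    print(f"host side total:  ~{host_gbps:.0f} Gbit/s")
    print(f"network side:      {NETWORK_GBPS} Gbit/s")
```

Under these assumptions the aggregate host-side bandwidth is of the same order as the network-side bandwidth, which fits the earlier point that a DPU's I/O capability is sized to match the network.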

As a new type of dedicated processor, DPU will certainly become an important component in future computing systems as the demand side changes, which will play a vital role in supporting the next generation of data centers.

4. Relationship between DPU and CPU and GPU

The CPU defines the entire IT ecosystem. Both x86 on the server side and ARM on the mobile side have built stable ecosystems, forming not only a technology ecosystem but also a closed value chain.

The GPU is the main chip for regular, structured computation such as graphics rendering. Through NVIDIA's promotion of general-purpose GPU (GPGPU) computing and the CUDA programming framework, the GPU has become the main computing engine for data-parallel tasks such as graphics and image processing, deep learning, and matrix operations, and the most important auxiliary computing unit in high-performance computing. Of the top 10 systems in the TOP500 list of high-performance computers (supercomputers) released in June 2021, six (the 2nd, 3rd, 5th, 6th, 8th, and 9th) deploy NVIDIA GPUs.

Data centers differ from supercomputers. Supercomputers are mainly oriented toward scientific computing, such as large aircraft development, oil exploration, new drug research and development, weather forecasting, and electromagnetic environment calculation; performance is the main metric, and requirements for access bandwidth are not high. Data centers, in contrast, serve commercial cloud computing applications and have higher requirements for access bandwidth, reliability, disaster recovery, and elastic scaling. Technologies such as container clouds, parallel programming frameworks, and content distribution networks were developed to better support upper-layer commercial applications such as e-commerce, payment, video streaming, cloud drives, and office automation (OA). However, the service overhead of these IaaS and PaaS layers is extremely high. Amazon once announced that the system overhead of AWS exceeds 30%. If better QoS is required, the overhead of infrastructure services such as network, storage, and security will be even higher.

These infrastructure-layer workloads do not match the CPU architecture well, which leads to low computing efficiency. Existing CPU architectures fall into two broad types: multi-core architectures (several to dozens of cores) and many-core architectures (hundreds of cores or more). Each supports a general-purpose, standardized instruction set such as x86 or ARM. With the instruction set as the boundary, software and hardware were separated and developed independently, which quickly drove the joint growth of the software industry and the microprocessor industry. However, as software has grown more complex, more attention has gone to software productivity, and the discipline of software engineering focuses more on how to build large software systems efficiently than on how to achieve the highest possible execution performance with fewer hardware resources. The industry has a tongue-in-cheek "Andy and Bill's law": "What Andy gives, Bill takes away." Andy refers to Andy Grove, former CEO of Intel, and Bill refers to Bill Gates, former CEO of Microsoft; the point is that gains in hardware performance are soon consumed by software.

Just as the CPU is not efficient enough at image processing, it is also inefficient at a large number of infrastructure-layer workloads, such as network protocol processing, switching and routing computation, compute-intensive tasks like encryption, decryption, and data compression, and the data consistency protocols that support distributed processing, such as Raft. The data involved enters the system either through network I/O or through the board-level high-speed PCIe bus, and is then provided to the CPU or GPU via DMA through shared main memory. The CPU must handle a large number of upper-layer applications, maintain the underlying software infrastructure, and process various special I/O protocols; these complex computing tasks overwhelm it.

These infrastructure-layer workloads open up broad room for heterogeneous computing. Offloading them from the CPU can "improve quality and efficiency" in the short term and provide technical support for new business growth in the long run. The DPU is expected to become the representative chip for carrying these workloads, complementing the CPU and GPU to build a more efficient computing platform. It can be predicted that the number of DPUs used in data centers will reach the same order of magnitude as the number of data center servers, with tens of millions added every year; including replacement of the installed base, overall demand over five years is estimated to exceed 200 million units, more than the demand for discrete GPU cards. A server may not have a GPU, but it must have a DPU, just as every server must be equipped with a NIC.

5. Industrialization opportunities of DPU

As the most important component of IT infrastructure, data centers have been the focus of the major high-end chip makers over the past 10 years. Each major vendor has repackaged its existing products and technologies under the new concept of the DPU and brought them to market.

After acquiring Mellanox, NVIDIA built on the original ConnectX series of high-speed NIC technology to launch its BlueField series of DPUs, which became the benchmark of the DPU field. Xilinx, a leading maker of chips for algorithm acceleration, adopted "Datacenter First" as its new development strategy in 2018 and released the Alveo series of accelerator cards, aimed at significantly improving the performance of cloud and on-premises data center servers. In April 2019, Xilinx announced the acquisition of Solarflare Communications, combining its leading FPGA, MPSoC, and ACAP solutions with Solarflare's ultra-low-latency network interface card (NIC) technology and application acceleration software to deliver a new SmartNIC solution. Intel acquired Altera, Xilinx's competitor, at the end of 2015 to strengthen its hardware acceleration capability beyond general-purpose processors. The IPU (which can be regarded as Intel's version of the DPU), released by Intel in June 2021, integrates an FPGA with a Xeon D series processor and is a strong competitor in the DPU field. The IPU is an advanced network device with enhanced accelerators and Ethernet connectivity that uses tightly coupled, dedicated programmable cores to accelerate and manage infrastructure functions. It provides full infrastructure offload and can act as a control point for hosts running infrastructure applications, providing an additional layer of protection. At almost the same time, Marvell released the OCTEON 10 DPU, which offers not only strong forwarding capability but also outstanding AI processing capability.

During the same period, Internet companies that had not traditionally been involved in chip design, such as Google and Amazon overseas and Alibaba in China, launched their own chip development programs one after another, focusing on high-performance dedicated processor chips for data processing in the hope of improving the cost structure of cloud servers and raising performance per unit of energy consumed. Data Research predicts that the DPU's largest application demand is in the cloud computing market, with market size growing as cloud data centers are upgraded. By 2025, the market capacity in China alone is expected to reach 4 billion US dollars.

Source: Wang Zhiyu public account
